LangGraph has given developers the power to build AI agents that can reason, plan, and even correct their own mistakes. These agents are not just simple input-output bots; they operate in cycles, much like a human mind thinking through a problem.

But what happens when the next logical step for your agent is to talk to someone? This is where the agent often hits a wall, trapped in a silent, text-based world. The solution is a powerful bridge to the real world: VoIP Calling API Integration for LangGraph.

Imagine an AI agent that can do more than just process information. Imagine it can call a customer to troubleshoot an issue, patiently trying different solutions in a loop until the problem is solved. This is not a distant dream.

By giving your sophisticated LangGraph agent a voice, you transform it from a theoretical problem solver into a practical, real-world actor. This guide will explain exactly how you can make that happen.

Table of contents

- What is LangGraph? Beyond Simple Chains

- Why Does a LangGraph Agent Need a Voice?

- How Does VoIP Calling API Integration for LangGraph Work?

- Why is FreJun AI an Ideal Voice Infrastructure?

- Real World Use Cases for Voice-Enabled LangGraph Agents

- Conclusion: Giving Your Smart Agent a Powerful Voice

- Frequently Asked Questions (FAQs)

What is LangGraph? Beyond Simple Chains

To understand why voice is such a game-changer, we first need to appreciate what makes LangGraph special. LangGraph is a library from the popular LangChain ecosystem that lets you build stateful, multi-actor applications with Large Language Models (LLMs).

If a standard LangChain “chain” is like following a recipe step by step in a straight line, LangGraph is like cooking the way a professional chef does. A chef can taste the soup, decide it needs more salt, and go back a step to add it. They can even decide the soup is not working and switch to making a salad instead.

LangGraph allows for this by creating applications as a graph. Instead of a straight line, you have:

- Nodes: These are the “steps” or functions your agent can perform, like calling a tool or asking the LLM to think.

- Edges: These are the connections between nodes. A normal edge always leads to the same next node, while a conditional edge looks at the current state to decide which node to go to next.

- State: This is the memory of the agent. It is a central object that every node can read and update as it runs, so the agent always knows the full context of what has happened.

This structure allows agents to work in cycles, giving them the ability to loop, re-evaluate information, and make more complex decisions.
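To make nodes, edges, and state concrete, here is a dependency-free sketch in plain Python built around the chef analogy above. It is not LangGraph's API: the names `taste`, `add_salt`, `after_taste`, and `run_graph` are hypothetical, state is a plain dict, and `max_steps` is an assumed guard against endless cycles.

```python
from typing import Callable, Dict

State = dict          # simplified: LangGraph normally uses a typed state schema
END = "__end__"       # sentinel node name meaning "stop"


def taste(state: State) -> State:
    """Node: taste the soup and record whether it has enough salt."""
    return {**state, "tastes": state["tastes"] + 1, "ok": state["salt"] >= 2}


def add_salt(state: State) -> State:
    """Node: add one pinch of salt."""
    return {**state, "salt": state["salt"] + 1}


def after_taste(state: State) -> str:
    """Conditional edge: finish if the soup is right, otherwise loop back to add_salt."""
    return END if state["ok"] else "add_salt"


NODES: Dict[str, Callable[[State], State]] = {"taste": taste, "add_salt": add_salt}
# Each node's outgoing edge is a function of the state; a fixed edge simply ignores it.
EDGES: Dict[str, Callable[[State], str]] = {
    "taste": after_taste,                # conditional edge
    "add_salt": lambda _state: "taste",  # fixed edge: always taste again
}


def run_graph(start: str, state: State, max_steps: int = 20) -> State:
    """Run nodes, following edges, until END; max_steps guards against endless cycles."""
    node = start
    for _ in range(max_steps):
        state = NODES[node](state)
        node = EDGES[node](state)
        if node == END:
            return state
    raise RuntimeError("graph did not reach END within max_steps")


final = run_graph("taste", {"salt": 0, "tastes": 0, "ok": False})
print(final)  # {'salt': 2, 'tastes': 3, 'ok': True}
```

The graph loops taste → add_salt → taste until the conditional edge returns END. In LangGraph itself you would declare the same shape with `StateGraph`, registering nodes with `add_node`, fixed edges with `add_edge`, and routing functions with `add_conditional_edges`, and then call `compile()`.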


Why Does a LangGraph Agent Need a Voice?

A LangGraph agent can be brilliant at managing its internal state. It can decide that it needs more information from a human to proceed with a task. But how does it get that information? A chat window is one option, but for many real-world business processes, the answer is a phone call.

Without a voice, your agent cannot:

- Provide proactive customer support over the phone.

- Schedule an appointment with a clinic that only takes phone calls.

- Qualify a sales lead through a natural, interactive conversation.

This is where Voice over Internet Protocol (VoIP) comes in. A VoIP API is a service that allows your software application to make and receive phone calls over the internet.

A VoIP Calling API Integration for LangGraph is the critical link that connects your agent’s complex digital brain to the global telephone network.

How Does VoIP Calling API Integration for LangGraph Work?

Connecting a voice to your LangGraph agent might seem daunting, but modern voice platforms make the process surprisingly manageable. Let’s break down the components and the flow of a real conversation.

The Core Components of a Voice-Enabled LangGraph Agent

You need four key pieces working together:

- The LangGraph Application (The Brain): This is the agent you have built. It manages the state, contains the logic in its nodes, and decides what to do next.

- The Voice Infrastructure (The Mouth and Ears): This is your VoIP API provider. It handles the messy, complex work of managing the phone call, dealing with telephony protocols, and streaming audio in real time.

- Speech-to-Text (STT): An AI service that listens to the audio from the human and transcribes it into text that your LangGraph agent can understand.

- Text-to-Speech (TTS): An AI service that takes the text response from your agent and converts it into natural-sounding spoken words.
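One way to see how the four pieces divide the work is to write a single conversational turn as three small interfaces composed together. This is a minimal sketch under stated assumptions: `SpeechToText`, `TextToSpeech`, `Agent`, and `handle_turn` are hypothetical names, and the fake classes stand in for real STT/TTS providers and your compiled LangGraph app. Real deployments stream audio in small chunks rather than passing a whole utterance as one byte string.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class Agent(Protocol):
    def respond(self, user_text: str) -> str: ...


def handle_turn(audio_in: bytes, stt: SpeechToText, agent: Agent, tts: TextToSpeech) -> bytes:
    """One turn: caller audio -> text -> agent reply -> reply audio.

    The voice infrastructure supplies audio_in and plays the returned bytes to the caller.
    """
    user_text = stt.transcribe(audio_in)
    reply_text = agent.respond(user_text)
    return tts.synthesize(reply_text)


# Fake components so the sketch runs without any external service.
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretend the "audio" is already text


class EchoAgent:
    def respond(self, user_text: str) -> str:
        return f"You said: {user_text}"


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # pretend the text bytes are audio


out = handle_turn(b"Hi, I need to book a flight to London.", FakeSTT(), EchoAgent(), FakeTTS())
print(out.decode("utf-8"))  # You said: Hi, I need to book a flight to London.
```

Keeping each component behind its own interface is what lets you swap STT or TTS providers without touching the agent logic.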


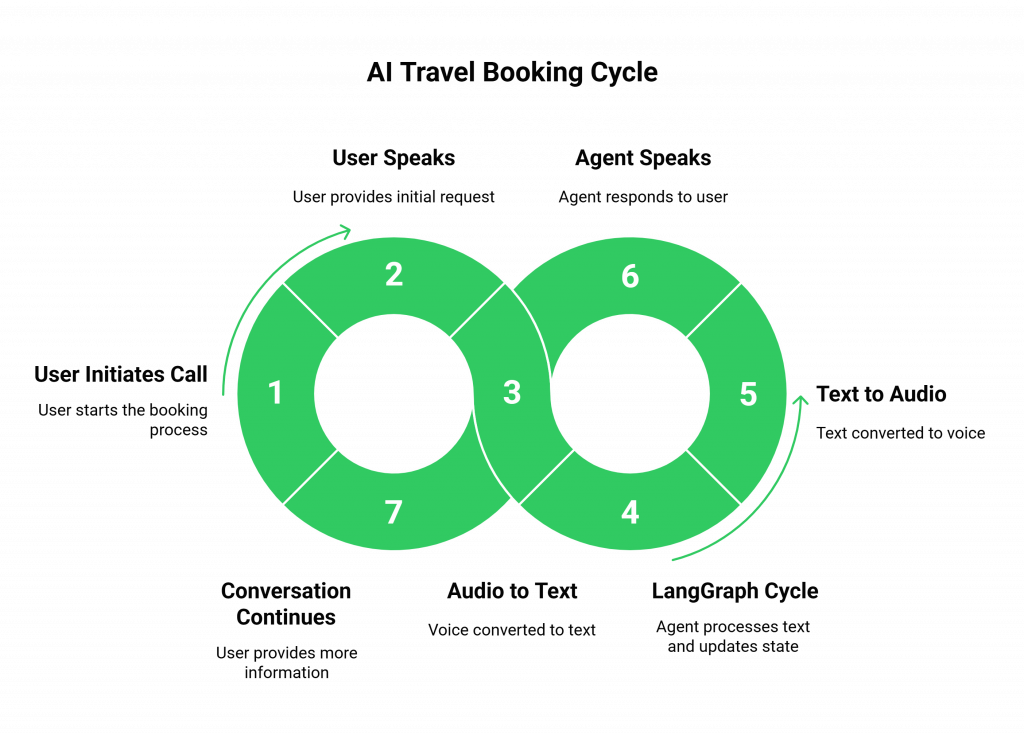

A Step-by-Step Conversational Flow

Let’s use an example: an AI travel agent built with LangGraph is tasked with booking a flight for a user.

- The Call Begins: The user calls a number connected to your application. The VoIP API answers the call and immediately opens a two-way, real-time audio stream.

- The User Speaks: The user says, “Hi, I need to book a flight to London.”

- Audio to Text: The VoIP platform streams the user’s voice to your chosen STT service, which converts it into text in near real time: “Hi, I need to book a flight to London.”

- The LangGraph Cycle: This text is passed as input to your LangGraph agent and enters the first node. The agent’s state is updated (e.g., {"destination": "London"}). The agent’s logic, likely an LLM call, determines that it needs more information (the travel date) before it can proceed.

- Text to Audio: The agent generates its text response: “I can help with that. What date would you like to depart?” This text is sent to your TTS service, which converts it into spoken audio.

- The Agent Speaks: The VoIP platform streams this audio back to the user over the phone line, ideally within a fraction of a second of the user finishing their sentence.

- The Conversation Continues: The user’s answer (“This Friday”) goes back through the same flow, starting again from “The User Speaks”, and the cycle continues. The LangGraph agent keeps updating its state with each turn until it has all the information it needs to use a tool and book the flight.
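The state updates in this walkthrough amount to slot filling: each turn merges newly heard facts into the state, and the agent either asks for whatever is still missing or moves on to the booking tool. The sketch below assumes simple keyword rules as a stand-in for LLM extraction; `extract`, `next_step`, `KNOWN_CITIES`, and the prompts are hypothetical, and the booking step is only a placeholder string. In LangGraph, `next_step` would roughly correspond to a node, and the "ask or book" decision to a conditional edge.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical extraction rules; a real agent would typically ask an LLM to do this.
KNOWN_CITIES = ("London", "Paris", "Tokyo")
REQUIRED = ("destination", "date")
PROMPTS = {
    "destination": "Where would you like to fly to?",
    "date": "What date would you like to depart?",
}
DATE_PATTERN = re.compile(r"\b(today|tomorrow|this \w+day)\b", re.IGNORECASE)


def extract(user_text: str) -> Dict[str, str]:
    """Pull any recognizable fields out of one transcribed utterance."""
    found: Dict[str, str] = {}
    for city in KNOWN_CITIES:
        if city.lower() in user_text.lower():
            found["destination"] = city
    match = DATE_PATTERN.search(user_text)
    if match:
        found["date"] = match.group(1)
    return found


def next_step(state: Dict[str, str], user_text: str) -> Tuple[Dict[str, str], str]:
    """Merge new facts into the state, then ask for what is missing or 'book'."""
    state = {**state, **extract(user_text)}
    missing = [field for field in REQUIRED if field not in state]
    if missing:
        return state, PROMPTS[missing[0]]
    # Placeholder for a real booking tool call.
    return state, f"Booking your flight to {state['destination']} for {state['date']}."


state: Dict[str, str] = {}
replies: List[str] = []
for utterance in ["Hi, I need to book a flight to London.", "This Friday"]:
    state, reply = next_step(state, utterance)
    replies.append(reply)
print(replies)
```

The first turn fills only the destination, so the agent asks for a date; the second turn completes the state and the placeholder booking step runs.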

This continuous loop is what makes a VoIP Calling API Integration for LangGraph so powerful. The agent is not just following a script; it is actively managing a stateful conversation.


Why is FreJun AI an Ideal Voice Infrastructure?

While LangGraph provides the brain, you need a robust nervous system to connect that brain to a voice. This is exactly where FreJun AI fits in. We are not an LLM or an AI modeling platform; we are the specialized voice infrastructure that handles the complex telephony layer so you can focus on building your AI.

Our entire platform is engineered for the ultra-low latency that real-time conversations demand. FreJun AI provides real-time audio streaming, manages the telephony connections, and gives you developer-first SDKs to make the integration seamless.

We are the foundational “plumbing” that reliably connects your brilliant LangGraph agent to the outside world.

Real World Use Cases for Voice-Enabled LangGraph Agents

Once you have a successful VoIP Calling API Integration for LangGraph, the possibilities are immense.

- Advanced Customer Service Agents: An agent can guide a user through a multi-step troubleshooting process. If the first solution fails, the agent’s graph logic can loop back, consult its tools for another solution, and try again, all within the same phone call.

- Autonomous Appointment Schedulers: The agent can call a doctor’s office. If the requested time is unavailable, it can cycle back to its logic, check the user’s alternative calendar slots, and propose a new time, continuing the conversation fluidly.

- Dynamic Market Research Bots: An agent can conduct a phone survey. Based on a user’s answer, a conditional edge in the graph can lead to a completely different set of follow-up questions, making the survey feel adaptive and intelligent.
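The survey case is a textbook conditional edge: a routing function reads the latest answer from the state and names the branch to run next. As an assumed example, the sketch below borrows the Net Promoter Score bands (0 to 6 detractor, 7 to 8 passive, 9 to 10 promoter); `route_after_rating` and the follow-up questions are hypothetical.

```python
from typing import Dict, List

# Hypothetical follow-up question sets; each key plays the role of a branch node.
FOLLOW_UPS: Dict[str, List[str]] = {
    "detractor": ["What was the main problem?", "What would make you try us again?"],
    "passive": ["What one thing would make us better?"],
    "promoter": ["What do you like most?", "Would you recommend us to a colleague?"],
}


def route_after_rating(state: dict) -> str:
    """Conditional edge: choose the next branch from the caller's 0-10 rating."""
    rating = state["rating"]
    if not 0 <= rating <= 10:
        raise ValueError("rating must be between 0 and 10")
    if rating <= 6:
        return "detractor"
    if rating <= 8:
        return "passive"
    return "promoter"


for rating in (3, 7, 10):
    branch = route_after_rating({"rating": rating})
    print(rating, branch, FOLLOW_UPS[branch][0])
```

In a LangGraph build, a function like this is what you would pass to `add_conditional_edges`, with each branch name mapped to a node that asks its own follow-up questions.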

Conclusion: Giving Your Smart Agent a Powerful Voice

LangGraph provides the framework to build AI agents with memory and reasoning. It allows them to think in cycles, to plan, and to use tools in a way that mimics intelligent problem solving. But without a voice, even the smartest agent is limited. The VoIP Calling API Integration for LangGraph is the final, essential step to unleashing its full potential.

By combining the stateful, cyclic logic of LangGraph with the real-time, low-latency communication of a dedicated voice infrastructure, you can build the next generation of autonomous agents that truly operate in the human world.

The future of AI is not just about thinking; it’s about doing. And for many tasks, doing starts with a simple phone call. The power of a seamless VoIP Calling API Integration for LangGraph makes that future a reality today.


Frequently Asked Questions (FAQs)

LangGraph is a tool for developers to build AI applications where the agent can think in loops or cycles. Instead of just going from step A to B to C, it can go from A to B, then decide to go back to A or jump to step Z, making it much smarter and more flexible than a simple chatbot.

Standard chatbots are often stateless; they forget the context of the previous turn. LangGraph is built around a “state” object, meaning it remembers everything that has happened in the conversation. This is essential for a natural, multi-turn phone call where context is key.

The VoIP API is the bridge to the telephone network. It handles the jobs of making or receiving the call, converting audio to a digital stream that your code can use, and playing audio back to the human user, all in real time.
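To make "a digital stream that your code can use" concrete: telephony audio is commonly G.711 μ-law, sampled at 8 kHz with one byte per sample. If a provider delivers it in 20 ms frames, each frame is 8,000 × 0.020 = 160 bytes. The format and frame size here are assumptions, so check your provider's documentation, since many also offer 16-bit linear PCM. The sketch below computes the frame size and splits a buffer into frames.

```python
from typing import List

SAMPLE_RATE_HZ = 8_000   # assumed: narrowband telephony audio
BYTES_PER_SAMPLE = 1     # assumed: G.711 mu-law, 8 bits per sample
FRAME_MS = 20            # assumed frame duration


def frame_size_bytes(sample_rate_hz: int = SAMPLE_RATE_HZ,
                     bytes_per_sample: int = BYTES_PER_SAMPLE,
                     frame_ms: int = FRAME_MS) -> int:
    """Bytes in one frame = samples per frame x bytes per sample."""
    return sample_rate_hz * frame_ms // 1000 * bytes_per_sample


def split_into_frames(audio: bytes, frame_bytes: int) -> List[bytes]:
    """Chunk a raw audio buffer into fixed-size frames; the last one may be short."""
    return [audio[i:i + frame_bytes] for i in range(0, len(audio), frame_bytes)]


one_second = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)  # placeholder bytes, 1 s worth
frames = split_into_frames(one_second, frame_size_bytes())
print(frame_size_bytes(), len(frames))  # 160 50
```

Under the same 20 ms framing, 16 kHz 16-bit PCM would give `frame_size_bytes(16_000, 2)`, or 640 bytes per frame.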

High latency creates awkward pauses in the conversation. If the agent takes two or three seconds to respond, the conversation feels unnatural and frustrating, and the user will likely hang up. Ultra-low latency is the most critical factor for a successful voice agent.
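Latency adds up because, in a simple pipeline, each stage waits for the one before it. The sketch below sums per-stage delays for one turn and reports the largest contributor. The stage names, the timings, and the 1,000 ms budget are illustrative assumptions, not measurements, so substitute numbers measured on your own stack.

```python
from typing import Dict, Tuple

# Hypothetical per-stage delays in milliseconds for one turn.
STAGES_MS: Dict[str, int] = {
    "network_in": 50,
    "stt_final_transcript": 300,
    "llm_response": 900,
    "tts_first_audio": 250,
    "network_out": 50,
}
BUDGET_MS = 1000  # assumed target for the pause after the caller stops speaking


def check_turn(stages: Dict[str, int], budget_ms: int) -> Tuple[int, bool, str]:
    """Return total delay, whether it exceeds the budget, and the slowest stage."""
    total = sum(stages.values())
    slowest = max(stages, key=lambda name: stages[name])
    return total, total > budget_ms, slowest


total, over, slowest = check_turn(STAGES_MS, BUDGET_MS)
print(total, over, slowest)  # 1550 True llm_response
```

With these placeholder numbers, the turn misses the budget and the LLM call is the first place to look. Streaming partial results between stages so they overlap is one common way to shrink the total.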

No, and you should not. The best approach is to use high-quality STT and TTS services from specialized providers via their APIs. A model-agnostic voice infrastructure allows you to plug in the best services for your specific needs.