Building real-time voice AI is no longer about proving it can work – it’s about making it feel natural. The biggest barrier is latency. Even the smartest AI agent sounds robotic if replies take seconds to arrive.

This blog explores how to lower latency in voice AI conversations, breaking down each stage of the pipeline from speech-to-text to network transport. Along the way, we’ll highlight practical methods teams can use to reach sub-second interactions.

For product builders comparing the top programmable voice AI APIs with low latency, or engineering teams searching for a reliable voice API for developers, this guide offers a clear, technical roadmap.

What Does Latency Mean in Voice AI Conversations?

In simple terms, latency is the delay between a person finishing their sentence and hearing the first reply from the AI. A study on natural spoken conversation in English found that people typically wait about 239 ms after the other person finishes speaking before starting themselves – delay beyond this range begins to feel slow.

Latency is not caused by a single step; it is the sum of several. Every voice agent follows this flow:

- Audio capture from the microphone.

- Speech-to-Text (STT) converts speech into text.

- The dialogue model (often an LLM) processes the text and generates a reply.

- Text-to-Speech (TTS) converts the reply back into audio.

- The audio is sent over a network and played back to the user.

When all of these steps are added together, the end-to-end latency decides whether the conversation feels natural. A total delay under one second usually feels smooth. A delay of two seconds or more makes the system sound robotic.

Why Is Latency So Critical in Voice AI?

Humans are sensitive to timing in conversations. In natural speech:

- A pause of 200 milliseconds feels instant.

- Around 500 milliseconds is still comfortable.

- Delays longer than one second feel slow.

- Beyond two seconds, people often repeat themselves or lose patience.

This has a direct impact on business outcomes:

- In customer support, longer calls increase handling costs.

- In sales, prospects hang up before hearing the pitch.

- In self-service, users give up and ask for a human agent.

This is why latency is not just a technical concern. It directly affects satisfaction, conversion, and cost.

Where Does Latency Come From?

The first step in solving latency is to know where it originates. A typical voice AI pipeline has five main sources of delay.

1. Speech-to-Text (STT)

STT models listen to audio and output text. Latency here depends on whether you use batch or streaming. Batch waits for the entire sentence, which can add one to two seconds. Streaming produces text as the user speaks, cutting delay but requiring careful handling of partial results.

Other factors include model size, how silence is detected, and the hardware used. Running models on GPUs or TPUs is faster than running them on CPUs. Experiments with STT deployed on edge devices show latency dropping to tens of milliseconds when audio capture, inference, and streaming are co-located.

2. Language Model or Dialogue Engine

Large models are powerful but slow to start producing output. The metric to watch here is time to first token. A general-purpose model might take close to a second before it produces its first word. The more context you pass in, the longer this takes.

Using smaller models, optimizing prompts, or applying retrieval to fetch only the needed data can help reduce this stage.

3. Text-to-Speech (TTS)

Here, the system must generate audio. The key factor is time to first byte – how long it takes before the first sound is ready. High-fidelity voices can take longer to synthesize. Chunking and streaming audio out as it is generated reduces the perceived wait.

4. Transport Layer

Even if your STT, model, and TTS are fast, the network can add delay. WebRTC calls usually add about 100 to 200 milliseconds. Calls over PSTN or SIP often add 400 to 600 milliseconds. If the servers are far from the caller, latency grows further.

5. Network Conditions

Unstable connections, packet loss, and mobile data all increase delay. Even the best pipeline cannot fully hide poor connectivity.

How Do You Measure Latency in Voice AI?

Reducing latency without measuring it is impossible. Teams need to instrument both the client and the server.

On the client side, record when the user stops speaking and when playback of the reply begins. On the server side, break down the time spent in each step – STT, inference, TTS, and transport.

The main metrics to track are:

- Time to first token (from the language model).

- Time to first byte (from TTS).

- End-to-end latency (from the end of the user’s speech to the first audio of the AI reply).
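
As a rough illustration, the three metrics above can be derived from a handful of timestamps recorded during one conversational turn. This is a minimal sketch, not a prescribed implementation: the `TurnTimer` class and the event names (`user_speech_end`, `llm_request_sent`, and so on) are hypothetical, and it assumes the end-to-end clock starts when the user stops speaking.

```python
import time


class TurnTimer:
    """Collects named timestamps for one conversational turn."""

    def __init__(self, clock=time.monotonic):
        # A monotonic clock avoids jumps when the wall clock is adjusted.
        self._clock = clock
        self._marks = {}

    def mark(self, event):
        """Record an event; if it was already recorded, keep the first time."""
        self._marks.setdefault(event, self._clock())

    def elapsed_ms(self, start, end):
        """Milliseconds between two recorded events."""
        return (self._marks[end] - self._marks[start]) * 1000.0

    def report(self):
        """Return the three headline metrics (event names are assumptions)."""
        return {
            "time_to_first_token_ms": self.elapsed_ms(
                "llm_request_sent", "llm_first_token"
            ),
            "time_to_first_byte_ms": self.elapsed_ms(
                "tts_request_sent", "tts_first_byte"
            ),
            "end_to_end_ms": self.elapsed_ms("user_speech_end", "playback_start"),
        }
```

Calling `mark()` at each handoff point and logging `report()` once per turn gives a per-stage breakdown that can be aggregated later.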

For example, in a typical WebRTC call you may see:

| Component | Average latency (ms) | Notes |
| --- | --- | --- |
| STT (streaming) | 250 | Depends on model and silence detection |
| LLM processing | 400–700 | Smaller models reduce this |
| TTS synthesis | 200 | Chunking helps reduce perceived delay |
| Transport | 150 | Higher over PSTN |
| Total | 1000–1300 | Natural if under 1000, slow if above 1500 |

This table shows that improving even one step can make the entire system feel more natural.
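
To make the arithmetic concrete, the budget in the table can be written down and summed in a few lines. The sketch below uses the table's figures as-is; the function names and the "smaller model" numbers in the usage note are illustrative assumptions, not measurements.

```python
# Per-stage latency ranges in milliseconds, copied from the table above.
# Rows with a single value are written as (value, value).
STAGE_BUDGET_MS = {
    "stt_streaming": (250, 250),
    "llm_processing": (400, 700),
    "tts_synthesis": (200, 200),
    "transport": (150, 150),
}


def total_range(budget):
    """Return (best, worst) end-to-end latency as the sum of all stages."""
    best = sum(low for low, _ in budget.values())
    worst = sum(high for _, high in budget.values())
    return best, worst


def largest_contributor(budget):
    """Return the name of the stage with the highest worst-case latency."""
    return max(budget, key=lambda stage: budget[stage][1])


def with_stage(budget, stage, new_range):
    """Return a copy of the budget with one stage's range replaced."""
    updated = dict(budget)
    updated[stage] = new_range
    return updated
```

With the table's numbers, `total_range(STAGE_BUDGET_MS)` returns `(1000, 1300)` and `largest_contributor(STAGE_BUDGET_MS)` returns `"llm_processing"`. Swapping in a hypothetical faster model with `with_stage(STAGE_BUDGET_MS, "llm_processing", (250, 400))` brings the total down to `(850, 1000)`.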

Reducing Latency in STT

Speech-to-Text is the first bottleneck. To improve it:

- Use streaming transcription instead of batch. This allows words to appear as soon as they are spoken.

- Adjust endpoint detection. Waiting for long silence increases lag. A tighter threshold speeds things up.

- Run STT models on accelerated hardware like GPUs or TPUs.

- Use domain-specific or smaller models where accuracy requirements allow.
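
The effect of the endpoint threshold can be sketched with a simple energy-based detector. This is an illustration only: production systems usually rely on a trained voice activity detector, and the frame size, energy threshold, and silence durations below are assumed values.

```python
def find_endpoint(frame_energies, frame_ms=20, silence_threshold=0.01,
                  min_silence_ms=300):
    """Return the time (ms) at which the utterance is declared finished.

    The endpoint fires once speech has been heard and is then followed by
    `min_silence_ms` of consecutive frames whose energy is below
    `silence_threshold`. Returns None if that never happens.
    """
    frames_needed = max(1, min_silence_ms // frame_ms)
    heard_speech = False
    silent_run = 0
    for index, energy in enumerate(frame_energies):
        if energy >= silence_threshold:
            heard_speech = True
            silent_run = 0  # speech resumed, restart the silence count
        elif heard_speech:
            silent_run += 1
            if silent_run >= frames_needed:
                return (index + 1) * frame_ms
    return None
```

For an utterance that ends at 600 ms, a 300 ms silence window declares the endpoint at 900 ms, while an 800 ms window waits until 1400 ms. The tighter setting saves half a second per turn, at the risk of cutting users off when they pause mid-sentence.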

Reducing Latency in Language Models

The dialogue engine or LLM is often the single largest contributor to delay. The main goal is to reduce time to the first token.

Practical steps include:

- Enable token streaming so that output starts as soon as possible.

- Choose smaller or distilled models for real-time conversations.

- Shorten prompts and remove unnecessary context to reduce processing time.

- Use retrieval techniques so that only the most relevant data is passed in, instead of the entire conversation history.

- Cache frequent responses such as greetings or confirmations.

These approaches help the system sound responsive while keeping accuracy acceptable for the task.
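
Two of the steps above, caching frequent responses and trimming context, are simple enough to sketch. Everything here is hypothetical: the cached phrases, the `max_words` budget, and the use of word count as a stand-in for tokens are assumptions for illustration.

```python
# Canned replies for very common utterances (example phrases only).
CACHED_REPLIES = {
    "hello": "Hi! How can I help you today?",
    "thanks": "You're welcome!",
}


def normalize(text):
    """Lowercase, drop surrounding punctuation, and collapse whitespace."""
    return " ".join(text.lower().strip(" .!?").split())


def cached_reply(user_text):
    """Return a canned reply if one exists, otherwise None."""
    return CACHED_REPLIES.get(normalize(user_text))


def trim_history(messages, max_words=200):
    """Keep the most recent messages whose combined length fits the budget.

    Word count is a rough proxy for tokens; a real system would use the
    tokenizer of whichever model it calls. The newest message is always
    kept, even if it exceeds the budget on its own.
    """
    kept = []
    used = 0
    for message in reversed(messages):
        words = len(message["content"].split())
        if kept and used + words > max_words:
            break
        kept.append(message)
        used += words
    return list(reversed(kept))
```

A turn handler could check `cached_reply()` first and skip the model entirely on a hit, then pass `trim_history(...)` to the model otherwise.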

Reducing Latency in TTS

Text-to-Speech can add another 200 to 400 milliseconds. The focus should be on starting playback quickly.

- Select TTS engines optimized for low latency rather than only high fidelity.

- Use chunked audio streaming, so playback starts with the first generated audio instead of waiting for the whole sentence.

- Cache common phrases or fallback responses. This avoids regeneration and allows instant playback.
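
One way to start playback early is to hand text to the TTS engine sentence by sentence while the language model is still generating. The sketch below shows only the chunking step; `sentence_chunks` is a hypothetical helper, and the punctuation-based split is a simplification that will mis-split abbreviations such as "Dr. Smith".

```python
import re

# Split on whitespace that follows sentence-ending punctuation.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentence_chunks(token_stream):
    """Yield complete sentences as soon as they appear in a stream of text."""
    buffer = ""
    for piece in token_stream:
        buffer += piece
        parts = _SENTENCE_END.split(buffer)
        # Everything except the last part is a finished sentence.
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        buffer = parts[-1]
    # Flush whatever remains when the stream ends.
    if buffer.strip():
        yield buffer.strip()
```

Each yielded sentence can be sent to synthesis immediately, so the first audio is ready after one sentence instead of after the full reply.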

Reducing Latency in the Transport Layer

Even with fast models, the network can ruin performance. Telephony adds unavoidable delay, but it can be minimized.

- Prefer WebRTC for lower overhead when possible.

- Use servers close to the caller’s region to reduce round-trip time.

- Optimize jitter buffer settings carefully; too much buffering adds lag, too little causes choppy audio.

- Monitor packet loss and apply adaptive recovery techniques.
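
To reason about jitter buffer sizing, it helps to estimate jitter from packet timing first. The sketch below uses the running interarrival jitter formula defined in RFC 3550 for RTP; the `suggested_buffer_ms` heuristic (a multiple of the jitter, clamped to a floor and ceiling) is an assumption for illustration, not a standard.

```python
def interarrival_jitter(send_times_ms, arrival_times_ms):
    """Running jitter estimate using the smoothing formula from RFC 3550.

    For consecutive packets, D is the change in transit time, and the
    estimate is updated as J += (|D| - J) / 16.
    """
    jitter = 0.0
    previous_transit = None
    for sent, arrived in zip(send_times_ms, arrival_times_ms):
        transit = arrived - sent
        if previous_transit is not None:
            delta = abs(transit - previous_transit)
            jitter += (delta - jitter) / 16.0
        previous_transit = transit
    return jitter


def suggested_buffer_ms(jitter_ms, floor_ms=20.0, ceiling_ms=200.0,
                        multiplier=3.0):
    """Hypothetical heuristic: buffer a few multiples of the jitter."""
    return min(ceiling_ms, max(floor_ms, multiplier * jitter_ms))
```

On a steady connection the estimate stays near zero and the buffer sits at its floor; as jitter grows, the buffer grows with it until it hits the ceiling, which caps the lag it can add.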

Infrastructure Choices That Reduce Latency

Even with optimized STT, LLM, and TTS components, latency can creep in if the overall infrastructure is not designed carefully. The placement of servers, the type of hardware, and the way workloads are routed all matter.

- Edge computing: Running inference close to the user avoids long round-trip times. For example, if your users are in the US, hosting your STT and TTS in Asia will add hundreds of milliseconds of unavoidable latency.

- GPU inference nodes: Large models run faster on GPUs or TPUs. Avoid CPU inference except as a fallback.

- Regional load balancing: Calls should be routed to the nearest available server. Global load balancers can direct traffic to the lowest-latency region automatically.

- Async processing: Wherever possible, use asynchronous processing so that steps do not block each other. For example, start sending partial STT output to the LLM while audio is still coming in.

- Avoid unnecessary transcoders: Every codec conversion adds delay. Use consistent codecs (for example, Opus or PCM) across the pipeline to minimize re-encoding.
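
The async processing point can be sketched with `asyncio` queues connecting the stages, so that each stage starts work as soon as the previous one emits something. The three stage functions below are stand-ins that simulate work with `asyncio.sleep`; they are assumptions for illustration and do not call any real STT, LLM, or TTS service.

```python
import asyncio


async def stt_stage(audio_chunks, transcripts):
    """Stand-in for streaming STT: emit a partial transcript per chunk."""
    for chunk in audio_chunks:
        await asyncio.sleep(0.01)  # simulated recognition time
        await transcripts.put(f"text({chunk})")
    await transcripts.put(None)  # sentinel: no more input


async def llm_stage(transcripts, replies):
    """Stand-in for the dialogue model: handle each partial as it arrives."""
    while (partial := await transcripts.get()) is not None:
        await asyncio.sleep(0.01)  # simulated inference time
        await replies.put(f"reply({partial})")
    await replies.put(None)


async def tts_stage(replies, played):
    """Stand-in for TTS and playback: consume replies as they are produced."""
    while (reply := await replies.get()) is not None:
        await asyncio.sleep(0.01)  # simulated synthesis time
        played.append(f"audio({reply})")


async def run_pipeline(audio_chunks):
    """Run all three stages concurrently and return what was played."""
    transcripts, replies, played = asyncio.Queue(), asyncio.Queue(), []
    # No stage waits for the previous one to finish the whole utterance.
    await asyncio.gather(
        stt_stage(audio_chunks, transcripts),
        llm_stage(transcripts, replies),
        tts_stage(replies, played),
    )
    return played
```

Running `asyncio.run(run_pipeline(["a", "b"]))` processes the second chunk in STT while the first is already in the model stage, which is the overlap that blocking, step-by-step code would lose.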


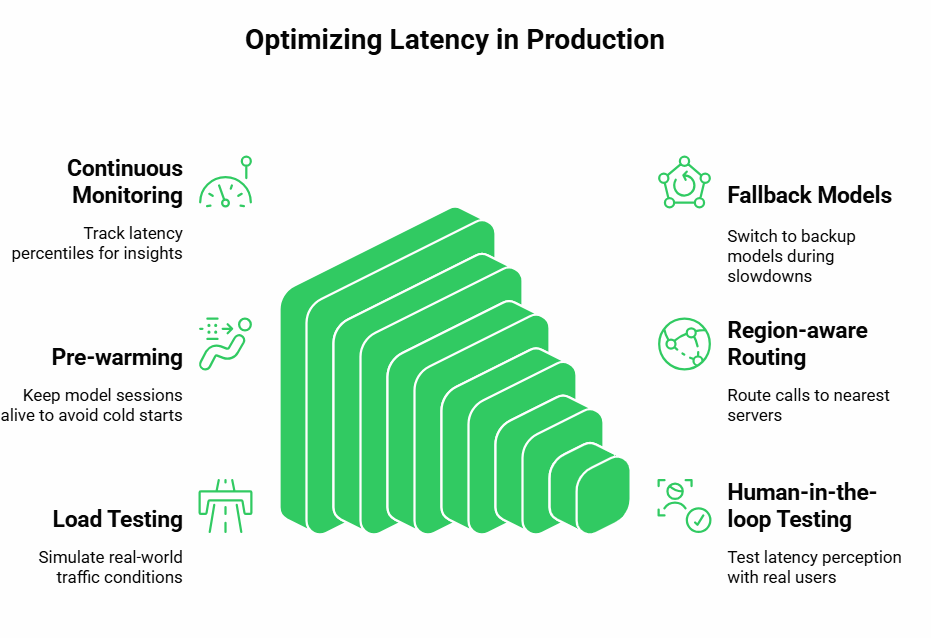

Best Practices for Keeping Latency Low in Production

Beyond architecture, there are ongoing operational practices that keep latency stable as systems scale.

- Continuous monitoring: Track p50, p90, and p99 latency for each component. Spikes at higher percentiles often reveal issues before average latency changes.

- Fallback models: If your main LLM or TTS provider slows down, fail over to a backup model. A slightly less accurate but faster model is better than silence.

- Pre-warming: Keep model sessions alive or pre-initialized. Cold starts can add over a second of delay.

- Region-aware routing: Route calls to servers nearest to the user’s location. Do not rely on a single central cluster.

- Load testing: Simulate real-world traffic volumes and network conditions. Latency often increases under scale even when components are fast in isolation.

- Human-in-the-loop testing: Automated benchmarks are useful, but real testers reveal when latency becomes noticeable. This ensures the system is optimized for perception, not just numbers.
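
For the monitoring practice above, percentiles are straightforward to compute from raw latency samples. This sketch uses the nearest-rank method; the function names are hypothetical, and a production setup would more likely rely on the histogram support in its metrics system.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_summary(samples_ms):
    """Return p50, p90, and p99 for a list of latency samples."""
    return {
        "p50": percentile(samples_ms, 50),
        "p90": percentile(samples_ms, 90),
        "p99": percentile(samples_ms, 99),
    }
```

With 85 turns at 300 ms, 13 at 900 ms, and 2 at 2500 ms, the mean is 422 ms and p50 is 300 ms, yet p90 is 900 ms and p99 is 2500 ms. The tail percentiles expose the slow turns that the average hides.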


How Does FreJun Teler Help Lower Latency?

At this point, we’ve broken down every stage where latency can creep in – from speech recognition and model inference to audio synthesis and network transport. The toughest part to solve on your own is the telephony and VoIP layer. This is exactly where FreJun Teler comes in.

Teler is a global voice infrastructure platform built to keep conversations flowing in real time. By streaming audio directly from calls to your STT and back from your TTS output, Teler eliminates the buffering and round-trip delays that usually break natural interactions. The result: your users hear replies almost instantly, keeping the conversation smooth and human-like.

From a developer’s point of view, Teler is model-agnostic – you can connect any STT, any LLM, and any TTS of your choice. Teler takes care of the voice layer, with low-latency streaming, global reliability, and developer-first SDKs. This means you don’t have to build or maintain your own telephony stack.

For teams building production-grade systems, Teler becomes one of the top programmable voice AI APIs with low latency, giving you the reliability of a purpose-built voice API for developers while you focus on the AI logic itself.

Conclusion

Latency is the defining factor that decides whether a voice AI feels seamless or robotic. By carefully addressing each stage – STT, LLM, TTS, and transport – teams can reduce delay and deliver real-time, human-like interactions. Optimizations such as streaming transcription, token-level generation, chunked audio playback, and distributed infrastructure ensure conversations stay natural even at scale.

FreJun Teler solves the most complex piece – global voice transport – so you can focus on AI logic without worrying about telephony overhead. As one of the top programmable voice AI APIs with low latency, Teler is the voice API for developers building production-ready agents.

Ready to experience sub-second conversations?

Schedule a demo with Teler today.

FAQs

1: What causes the most delay in voice AI conversations?

Most delay comes from STT, LLM inference, and TTS synthesis. Network transport adds extra milliseconds, especially on SIP or PSTN.

2: How fast should a voice AI system respond?

A good benchmark is under 800 milliseconds end-to-end. Anything beyond 1.5 seconds feels robotic and disrupts conversational flow.

3: Can smaller models really reduce latency without losing accuracy?

Yes. Distilled or domain-specific models produce faster token output, reducing delay while maintaining accuracy for focused use cases.

4: How does Teler help reduce latency in voice AI?

Teler optimizes telephony and VoIP transport with real-time streaming, ensuring low latency while developers focus on STT, LLM, and TTS.