Listen to a voice generated by ElevenLabs, and you’ll experience a paradigm shift. The era of robotic, monotone text-to-speech is over. What you hear is warmth, emotion, and inflection, a voice so lifelike it’s often indistinguishable from a human’s. For developers, this leap in quality is a game-changer, opening up a universe of possibilities for creating truly immersive and engaging voice applications.

But as any developer who has worked with real-time audio knows, a beautiful voice is only half the battle. You’ve integrated the perfect, custom-cloned voice into your AI agent. A user calls in, asks a question, and then… dead air. A painful, multi-second silence hangs on the line while your application frantically works to generate and deliver a response. The illusion of a real, fluid conversation is instantly shattered.

This is the critical challenge that separates a cool demo from a production-grade voice application. This guide will explore the top use cases of ElevenLabs for developers and, more importantly, reveal the essential infrastructure you need to overcome the latency barrier and make these incredible voices truly conversational.

The Latency Problem with High-Quality TTS

The very thing that makes ElevenLabs’ voices so good—their complexity and quality—can also be a source of latency. When building a real-time voice app (like a customer service bot), the typical, slow workflow looks like this:

- A user speaks to your application.

- Your app captures the audio and sends it to a Speech-to-Text (STT) engine.

- The resulting text is sent to your Large Language Model (LLM) to determine a response.

- Your LLM’s text response is sent to the ElevenLabs API.

- The Bottleneck: The ElevenLabs server processes the text and generates a high-quality audio file.

- The Second Bottleneck: Your application must wait to download this entire audio file.

- Finally, your application plays the downloaded file back to the user.

Each of these steps adds milliseconds, but the two bottleneck steps, generating the complete audio file and then downloading it, can add seconds. This “generate, download, play” method is a conversation killer. It’s simply too slow for a natural, back-and-forth dialogue.
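
To make the gap concrete, here is a minimal sketch that compares time-to-first-audio for the "generate, download, play" method against a streamed reply. Every timing in it (chunk count, synthesis time, transfer time) is an illustrative assumption, not a measurement of ElevenLabs or any other service.

```python
from dataclasses import dataclass


@dataclass
class TtsJob:
    """Illustrative timings for one synthesized reply (all values are assumptions)."""
    chunk_count: int            # number of audio chunks in the full reply
    synth_ms_per_chunk: int     # time for the TTS engine to produce one chunk
    transfer_ms_per_chunk: int  # time to move one chunk over the network


def first_audio_blocking(job: TtsJob) -> int:
    """Generate the whole file, download the whole file, then start playback."""
    generate_all = job.chunk_count * job.synth_ms_per_chunk
    download_all = job.chunk_count * job.transfer_ms_per_chunk
    return generate_all + download_all


def first_audio_streaming(job: TtsJob) -> int:
    """Start playback once the first chunk has been produced and delivered."""
    return job.synth_ms_per_chunk + job.transfer_ms_per_chunk


job = TtsJob(chunk_count=20, synth_ms_per_chunk=100, transfer_ms_per_chunk=25)
print(first_audio_blocking(job))   # 2500
print(first_audio_streaming(job))  # 125
```

With these assumed numbers the caller waits 2.5 seconds in the blocking case and about an eighth of a second in the streaming case. The blocking delay grows with the length of the reply, while the streaming delay does not.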

Why Voice Infrastructure Is Non-Negotiable

So, how do you get the stunning quality of ElevenLabs voices without the crippling latency? The answer is not a better TTS engine; it’s a better delivery system. You need a specialized voice infrastructure that can bypass the “download and play” bottleneck.

This is the exact problem FreJun AI was built to solve. We are not a TTS provider; we are the foundational layer that makes real-time voice possible.

Our Philosophy: “We handle the complex voice infrastructure so you can focus on building your AI.”

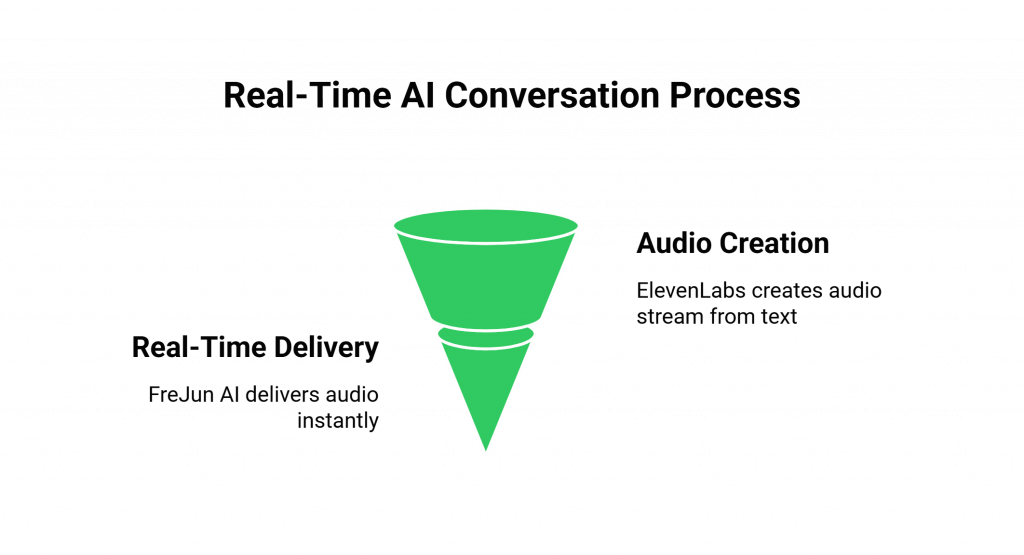

FreJun AI acts as a high-performance “nervous system” for your voice agent. Instead of the slow, traditional method, our platform enables a real-time streaming workflow:

- Your LLM generates the text response.

- You send this text to the ElevenLabs API, which supports audio streaming.

- The FreJun AI Solution: Our platform takes the audio stream directly from ElevenLabs and streams it back to the user over the live phone call in real time, with ultra-low latency.

There is no waiting for a file to be generated. No download delay. The conversation just flows. This infrastructure is the key to unlocking the true potential of ElevenLabs for developers in any real-time application.
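
The streaming half of this workflow can be sketched as follows. The endpoint path, the `xi-api-key` header, and the `text`/`model_id` body fields follow the publicly documented shape of the ElevenLabs streaming text-to-speech endpoint as we understand it; confirm them against the current API reference before relying on them. `send_to_call` is a hypothetical stand-in for whatever your telephony layer exposes for writing audio into a live call, and `VOICE_ID` and `API_KEY` are placeholders.

```python
import io
import json
import urllib.request
from typing import BinaryIO, Callable

# Assumed base URL; confirm in the ElevenLabs API reference.
ELEVENLABS_BASE = "https://api.elevenlabs.io/v1"


def build_stream_request(text: str, voice_id: str, api_key: str,
                         model_id: str = "eleven_multilingual_v2") -> urllib.request.Request:
    """Build (but do not send) a POST to the streaming text-to-speech endpoint."""
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{ELEVENLABS_BASE}/text-to-speech/{voice_id}/stream",
        data=body,
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def forward_chunks(audio: BinaryIO, send_to_call: Callable[[bytes], None],
                   chunk_size: int = 1024) -> int:
    """Relay audio chunk by chunk instead of buffering the whole reply.

    Returns the total number of bytes forwarded.
    """
    total = 0
    while chunk := audio.read(chunk_size):
        send_to_call(chunk)  # hypothetical hook into the live call
        total += len(chunk)
    return total


# Offline demonstration: a BytesIO object plays the role of the HTTP response body.
received: list[bytes] = []
request = build_stream_request("Your order has shipped.", "VOICE_ID", "API_KEY")
sent = forward_chunks(io.BytesIO(b"\x00" * 2500), received.append)
print(request.full_url)
print(sent, len(received))
```

Against the live service, the `BytesIO` object would be replaced by the response from `urllib.request.urlopen(request)`, which can be read in the same chunked way. The design point is that the first chunk reaches the caller while later chunks are still being generated.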

Top Use Cases of ElevenLabs for Developers

When you combine ElevenLabs’ best-in-class voices with FreJun AI’s real-time delivery, a new class of powerful applications becomes possible.

Hyper-Realistic, Real-Time Voice Agents

The Challenge: Traditional Interactive Voice Response (IVR) systems and customer service bots are widely disliked. Their robotic voices and slow response times lead to customer frustration and high call abandonment rates.

The Solution: Use ElevenLabs to create a unique, warm, and empathetic voice for your brand. You can even clone the voice of your most trusted brand ambassador or best-performing support agent. Then, deploy this agent on FreJun AI’s infrastructure. The result is an AI agent that can handle inbound queries, answer questions, and route calls with a voice that is not only pleasant to listen to but also responds instantly, just like a human.

- Real-World Impact: An e-commerce company can build a 24/7 support agent that handles order status inquiries with a calm, reassuring voice, dramatically improving customer satisfaction and reducing the load on human agents.

Dynamic and Personalized Outbound Calling

The Challenge: Automated outbound calls (robocalls) are notoriously ineffective because they are generic and impersonal. They sound pre-recorded and lead to immediate hang-ups.

The Solution: This is a perfect use case for ElevenLabs for developers. You can use their API to generate dynamic audio on the fly, inserting personalized details like names, appointment times, or specific product information into the conversation. Powered by FreJun AI’s outbound calling capabilities, your agent can deliver these messages in a way that feels like a one-on-one conversation, not a broadcast.

- Real-World Impact: A healthcare provider can deploy a voice agent with a friendly, professional voice to make appointment reminder calls. The agent can even handle simple interactions like confirming or rescheduling, all in a single, fluid conversation.
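
As a small illustration of the personalization step, the sketch below fills a reminder script with per-patient details before the text is handed to the TTS engine. The template wording, the field names, and the sample record are all hypothetical.

```python
from string import Template

# Hypothetical reminder script; the $placeholders are filled per call.
REMINDER = Template(
    "Hi $first_name, this is a reminder about your appointment with "
    "$provider on $day at $time. Say 'confirm' to keep it or 'reschedule' to change it."
)


def build_reminder(patient: dict[str, str]) -> str:
    """Fill the template for one patient.

    substitute() raises KeyError when a field is missing, so an incomplete
    record fails here instead of being spoken aloud with a gap in it.
    """
    return REMINDER.substitute(patient)


text = build_reminder(
    {"first_name": "Dana", "provider": "Dr. Lee", "day": "Tuesday", "time": "3:30 PM"}
)
print(text)
```

Failing loudly on a missing field is a deliberate choice for outbound calls: it is better to skip a call than to greet someone with a half-filled sentence.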

Immersive AI Characters for Gaming and Interactive Experiences

The Challenge: Creating high-quality voice acting for non-player characters (NPCs) in video games or for interactive storytelling is incredibly expensive and time-consuming, and it’s impossible to account for every possible user interaction with pre-recorded lines.

The Solution: With ElevenLabs’ voice design and cloning features, developers can create a diverse cast of unique and compelling characters. By integrating these voices into an application powered by an LLM and FreJun AI’s infrastructure, you can allow users to have live, unscripted phone conversations with these AI characters.

- Real-World Impact: A game studio could create a promotion where players can “call” a character from the game. The AI character, speaking with its unique voice, can respond to questions, share lore, and create a deeply immersive experience that extends beyond the game itself.

Scalable, High-Quality Audio Content Generation

The Challenge: Manually recording audio versions of blog posts, news articles, or internal training documents is not scalable and can be prohibitively expensive.

The Solution: This is a classic use case for the ElevenLabs API. You can create an automated workflow that converts any new text-based content into a natural-sounding audio file. This makes your content more accessible to a wider audience. To make it interactive, you could even deploy a voice agent using this same voice that allows users to call in and ask questions about the content, with an LLM providing answers in real time.

- Real-World Impact: A media company can offer an audio version of every article on its website, read by a consistent, authoritative AI voice. This adds significant value for subscribers who prefer to listen to content while commuting or multitasking.
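
One practical detail in such a workflow is that long articles usually have to be sent to a TTS service in pieces. The sketch below groups whole sentences into chunks under a size limit so that no request cuts a sentence in half. The 2,500-character default is an assumption for illustration only; actual per-request limits depend on the provider, model, and plan.

```python
import re


def split_for_tts(text: str, max_chars: int = 2500) -> list[str]:
    """Group whole sentences into chunks no longer than max_chars.

    A single sentence longer than max_chars is kept as its own oversized
    chunk rather than being cut mid-sentence.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)  # close the current chunk
            current = sentence      # start a new one with this sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


article = "First sentence. Second sentence! Third one? Fourth."
print(split_for_tts(article, max_chars=32))
# ['First sentence. Second sentence!', 'Third one? Fourth.']
```

Each chunk can then be synthesized separately and the resulting audio segments joined in order.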

The Technical Workflow: How It All Fits Together

For a developer, the integration of these best-in-class components is surprisingly straightforward with the right foundation:

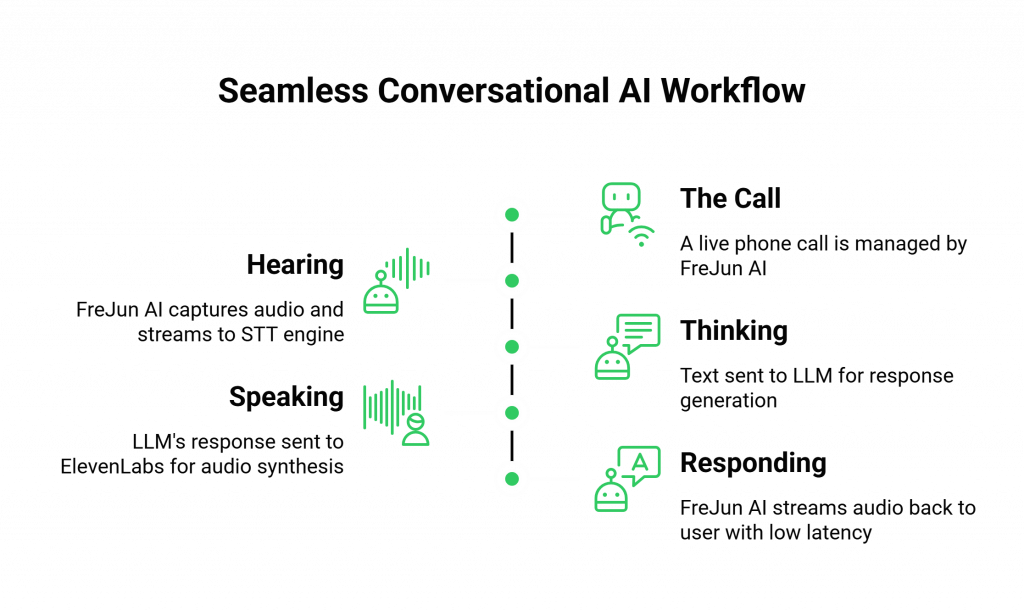

- The Call: A live phone call is managed by the FreJun AI platform.

- Hearing: FreJun AI captures the user’s audio and streams it in real time to your chosen STT engine (e.g., Deepgram, OpenAI Whisper).

- Thinking: The resulting text is sent to your LLM (e.g., GPT-4, Llama 3) to generate a text response.

- Speaking: Your LLM’s text response is sent to the ElevenLabs streaming API.

- Responding: FreJun AI takes the resulting audio stream directly from ElevenLabs and streams it back to the user over the call with ultra-low latency.

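Because every stage streams into the next, the agent can begin speaking within a fraction of a second instead of waiting several seconds for a complete audio file, creating a seamless and engaging conversational experience.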
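
The loop above can be sketched as a chain of asynchronous stages. Everything here is a stub: `transcribe`, `generate_reply`, `synthesize`, and `caller_audio` are hypothetical placeholders for your STT engine, LLM, TTS service, and telephony layer, and none of them call a real provider. The sketch only shows how each stage can pass results downstream as soon as they exist.

```python
import asyncio
from typing import AsyncIterator


async def caller_audio() -> AsyncIterator[bytes]:
    """Stub for inbound call audio arriving frame by frame."""
    for frame in (b"where is ", b"my order"):
        yield frame


async def transcribe(audio_frames: AsyncIterator[bytes]) -> str:
    """Stub STT: joins the frames into text once the caller stops speaking."""
    frames = [frame async for frame in audio_frames]
    return b"".join(frames).decode("utf-8")


async def generate_reply(transcript: str) -> AsyncIterator[str]:
    """Stub LLM: yields the reply sentence by sentence, as a streaming model would."""
    for sentence in (f"You asked: {transcript}.", "Here is the answer."):
        await asyncio.sleep(0)  # hand control back to the event loop
        yield sentence


async def synthesize(sentences: AsyncIterator[str]) -> AsyncIterator[bytes]:
    """Stub TTS: emits 'audio' for each sentence as soon as it is available."""
    async for sentence in sentences:
        yield sentence.encode("utf-8")


async def handle_turn() -> list[bytes]:
    """One conversational turn: hear, think, speak, respond."""
    transcript = await transcribe(caller_audio())
    played: list[bytes] = []
    async for audio_chunk in synthesize(generate_reply(transcript)):
        played.append(audio_chunk)  # a real agent would write this into the call
    return played


played = asyncio.run(handle_turn())
print(played)
```

Because `synthesize` consumes the reply one sentence at a time, audio for the first sentence is ready before the second sentence has been generated, which is the property that keeps the perceived delay short.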

Conclusion: A World-Class Voice Deserves a World-Class Delivery

The realism and emotional depth of ElevenLabs voices have opened the door to a new era of human-computer interaction. However, in the world of real-time voice applications, the quality of a voice is ultimately judged by its delivery. A delayed response, no matter how beautifully spoken, will always feel artificial.

By separating the AI “brain” and “voice” from the “nervous system,” you can build a truly superior application. By combining the unparalleled vocal quality of ElevenLabs with the robust, low-latency voice infrastructure of FreJun AI, you are not just building another voice bot.

You are creating a conversational experience that is intelligent, emotive, and instantly responsive, the kind that will captivate users and define the future of voice AI.

Frequently Asked Questions (FAQs)

What is the difference between the ElevenLabs API and a voice infrastructure platform like FreJun AI?

ElevenLabs’ API is a specialized Text-to-Speech (TTS) service that creates high-quality audio from text. A voice infrastructure platform like FreJun AI handles the entire communication layer: managing the live phone call, handling telephony protocols (SIP/PSTN), and streaming audio in real time, which allows you to use a service like ElevenLabs in a live, interactive conversation.

What is latency, and why does it matter for voice AI?

Latency is the delay between a user finishing their sentence and the AI agent starting its response. High latency creates unnatural pauses, making the conversation feel clumsy and frustrating for the user. For a conversation to feel natural, this delay should be less than a second.

Does FreJun AI only work with ElevenLabs?

No. FreJun AI is completely model-agnostic. Our platform is designed to work with any TTS, STT, or LLM provider. This gives you the freedom to choose the absolute best models for your specific use case.

Which programming languages can I use with FreJun AI?

FreJun AI provides developer-friendly SDKs and a robust API that can be integrated with any modern backend programming language, including Python, Node.js, Go, and Java, allowing you to build in the environment you are most comfortable with.