We have all been there: explaining a complex issue to a customer support agent for the third time, only to be transferred to another department where we have to start all over again. This frustrating cycle is a hallmark of support systems with short-term memory. For years, automation has promised to solve this, but early chatbots and voice bots suffered from the same fundamental flaw: a tiny context window. They could handle a simple question but would forget the conversation’s history after just a few turns.

Table of contents

- The Production Wall: Where Ambitious Voice AI Projects Fail

- The Solution: A Photographic Memory with an Enterprise-Grade Voice

- How to Build a Voice Bot Using MiniMax-Text-01?

- DIY Infrastructure vs. FreJun: A Head-to-Head Comparison

- Best Practices for Optimizing Your MiniMax-Text-01 Voice Bot

- From Long Context to Long-Term Value

- Frequently Asked Questions (FAQs)

This limitation has relegated most AI support to simple, tier-1 tasks, leaving human agents to handle any issue that requires deep context or reference to long documents. To automate complex support, businesses need an AI that can keep an entire conversation, and the documents behind it, in view at once. This is where a new class of large language models, designed for enormous context windows, is changing the game. Models like MiniMax-Text-01 are not just conversational; they can retain and reason over vast amounts of information, paving the way for a new generation of genuinely helpful voice agents.

The Production Wall: Where Ambitious Voice AI Projects Fail

The excitement around a model with a massive 4-million-token context window is immense. Developers can build impressive demos where the AI reads an entire technical manual to solve a problem. But a huge chasm separates this text-based demo from a scalable, production-grade voice bot that can handle live phone calls from real customers. This is the production wall, and it’s where most voice AI projects fail.

When a business tries to deploy its advanced AI over the phone, it runs headfirst into daunting technical challenges:

- Hardware and Setup Complexity: Running a massive model like MiniMax-Text-01 requires a significant investment in multi-GPU servers and the deep expertise to configure them with the right drivers, quantization, and device mapping.

- Crippling Latency: The delay between a caller speaking, the AI processing a huge amount of context, and the bot responding is the number one killer of a natural conversation. High latency leads to awkward pauses and a broken user experience.

- Integration Nightmare: A complete voice solution requires stitching together multiple real-time services: Automatic Speech Recognition (ASR), the MiniMax-Text-01 model, and a Text-to-Speech (TTS) engine. Orchestrating this pipeline seamlessly is a major engineering hurdle.

This infrastructure problem is the primary reason promising voice AI projects stall, consuming valuable resources on “plumbing” instead of perfecting the AI’s reasoning capabilities.

The Solution: A Photographic Memory with an Enterprise-Grade Voice

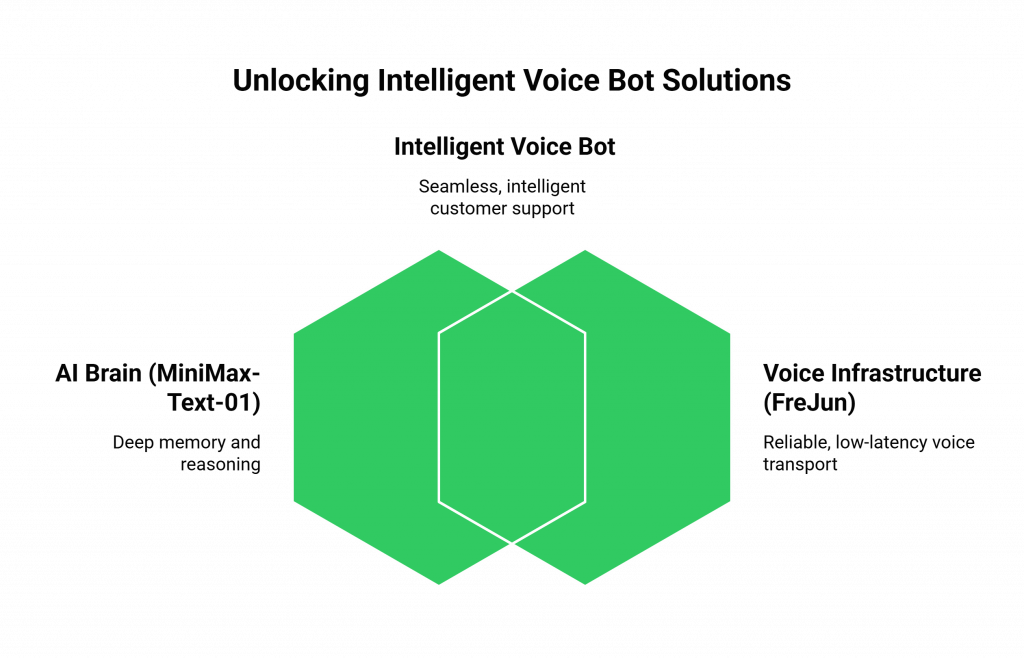

To build a voice bot that can solve complex problems, you need to combine an AI with a massive memory with a robust, low-latency “voice.” This is where the synergy between MiniMax-Text-01 and FreJun’s voice infrastructure creates a powerful, production-ready solution.

- The Brain (MiniMax-Text-01): MiniMax-Text-01 is a large language model with a context window of up to 4 million tokens, which makes it well suited to complex customer support. It can keep an entire conversation in context, up to that limit, and reference lengthy documents in real time.

- The Voice (FreJun): FreJun handles the complex voice infrastructure so you can focus on building your AI. Our platform is the critical transport layer that connects your MiniMax-Text-01 application to your customers over any telephone line. We ensure the speed and clarity that a real-time voice bot using MiniMax-Text-01 demands.

By pairing MiniMax’s memory with FreJun’s reliability, you can bypass the biggest hurdles in voice bot development and move directly to creating a support experience that is genuinely intelligent.

How to Build a Voice Bot Using MiniMax-Text-01?

While many tutorials focus on local setup, a real business application starts with a phone call. This guide outlines the production-ready pipeline for creating a voice bot using MiniMax-Text-01.

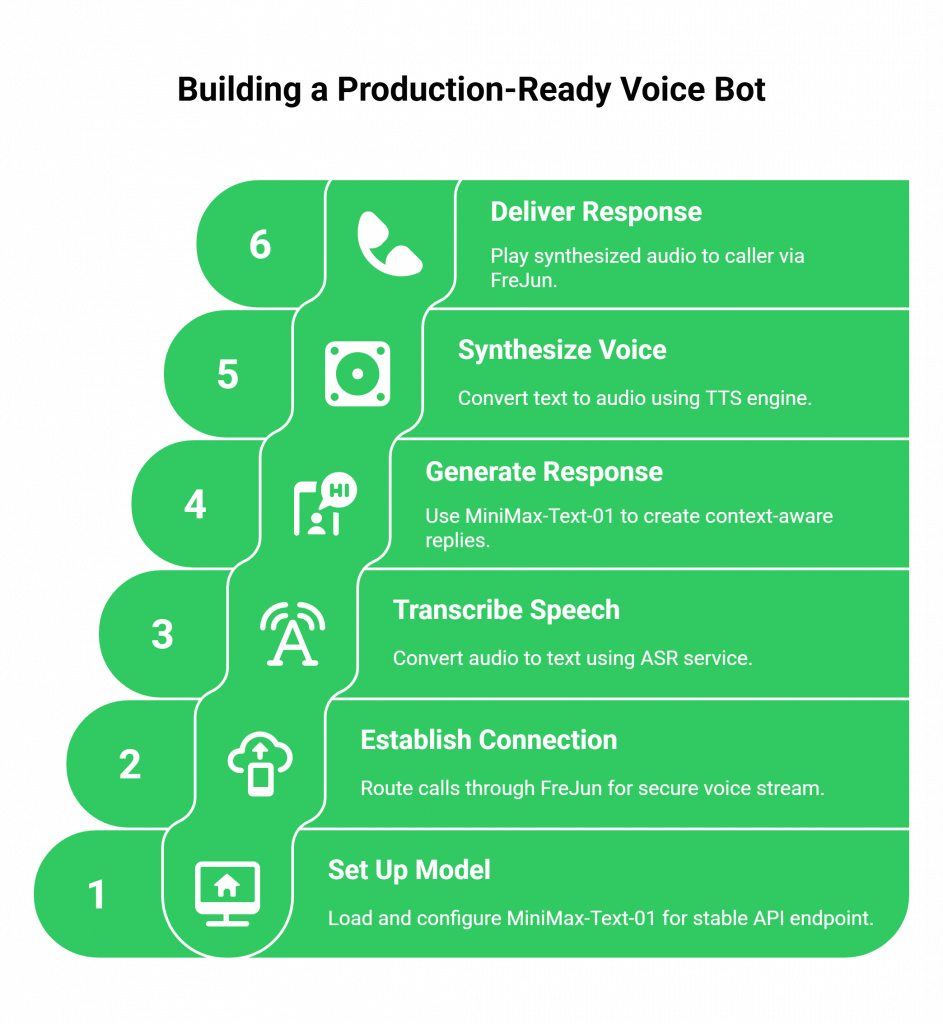

Step 1: Set Up Your MiniMax-Text-01 Model

Before your bot can think, its brain needs to be running.

- How it Works: Use the Hugging Face Transformers library to load the MiniMax-Text-01 model and tokenizer, passing trust_remote_code=True. Configure it with quantization (e.g., int8) and multi-GPU device mapping for performance. Serve the loaded model behind an inference endpoint so that your bot’s logic has a stable API to call.
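A minimal sketch of the loading step follows. It assumes the Hugging Face repo id `MiniMaxAI/MiniMax-Text-01`, the `QuantoConfig` int8 path in Transformers, eight GPUs, and a module-naming scheme (`model.embed_tokens`, `model.layers.N`, `model.norm`, `lm_head`) that you should verify against the model’s own configuration. The helper names `build_device_map` and `load_model` are illustrative, not part of any library.

```python
def build_device_map(num_layers: int, world_size: int) -> dict[str, str]:
    """Spread decoder layers evenly across GPUs (module names are assumed)."""
    if world_size < 1 or num_layers < world_size:
        raise ValueError("need at least one layer per GPU")
    device_map = {
        "model.embed_tokens": "cuda:0",
        "model.norm": f"cuda:{world_size - 1}",
        "lm_head": f"cuda:{world_size - 1}",
    }
    for layer in range(num_layers):
        # Contiguous blocks: early layers on cuda:0, late layers on the last GPU.
        gpu = layer * world_size // num_layers
        device_map[f"model.layers.{layer}"] = f"cuda:{gpu}"
    return device_map


def load_model(model_id: str = "MiniMaxAI/MiniMax-Text-01", world_size: int = 8):
    """Load tokenizer and int8-quantized model.

    Needs torch, transformers, a quantization backend, and real GPUs,
    so the heavy imports are deferred until this function is called.
    """
    import torch
    from transformers import (
        AutoConfig,
        AutoModelForCausalLM,
        AutoTokenizer,
        QuantoConfig,
    )

    config = AutoConfig.from_pretrained(model_id, trust_remote_code=True)
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,
        device_map=build_device_map(config.num_hidden_layers, world_size),
        quantization_config=QuantoConfig(weights="int8"),
        trust_remote_code=True,
    )
    return tokenizer, model
```

Keeping the device map in a pure function makes the layer placement easy to inspect before committing GPU time to a full load.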

Step 2: Establish the Call Connection with FreJun

This is where the real-world interaction begins.

- How it Works: A customer dials your business phone number, which is routed through FreJun’s platform. Our API establishes the connection and immediately begins providing your application with a secure, low-latency stream of the caller’s voice.

Step 3: Transcribe User Speech with ASR

The raw audio stream from FreJun must be converted into text.

- How it Works: You stream the audio from FreJun to your chosen ASR service. The ASR transcribes the speech in real time and returns the text to your application server.
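Streaming ASR services generally consume small, fixed-duration frames rather than whole utterances. The sketch below re-chunks incoming audio packets into such frames. It assumes 16-bit mono PCM at 8 kHz and 20 ms frames; confirm the format your transport and ASR provider actually use. `frame_audio` is a hypothetical helper, not a FreJun or ASR vendor function.

```python
from collections.abc import Iterable, Iterator

SAMPLE_RATE_HZ = 8000   # assumption: narrowband telephony audio
BYTES_PER_SAMPLE = 2    # assumption: 16-bit linear PCM, mono
FRAME_MS = 20           # assumption: frame size expected by the ASR service

# 8000 samples/s * 2 bytes * 0.020 s = 320 bytes per frame
FRAME_BYTES = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * FRAME_MS // 1000


def frame_audio(chunks: Iterable[bytes], frame_bytes: int = FRAME_BYTES) -> Iterator[bytes]:
    """Re-chunk arbitrarily sized packets into fixed-size frames."""
    buffer = bytearray()
    for chunk in chunks:
        buffer.extend(chunk)
        while len(buffer) >= frame_bytes:
            yield bytes(buffer[:frame_bytes])
            del buffer[:frame_bytes]
    if buffer:
        # Pad the final partial frame with silence (zero samples).
        yield bytes(buffer) + b"\x00" * (frame_bytes - len(buffer))
```

Each yielded frame can be forwarded to the ASR client as soon as it is complete, so transcription starts while the caller is still speaking.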

Step 4: Generate a Response with MiniMax-Text-01

The transcribed text is fed to your MiniMax-Text-01 model.

- How it Works: Your application takes the transcribed text, appends it to the ongoing conversation history (which can be very long), and sends the whole history as a prompt to your MiniMax-Text-01 API endpoint. The model’s large context window lets it consider the entire history when generating a context-aware reply.
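One way to keep that history is a small container that stores every turn and renders it through the tokenizer’s chat template. This is a sketch: the `Conversation` class and `render_prompt` helper are illustrative names, and the message schema is an assumption. Some chat templates expect plain string content while others expect a list of typed parts, so check the model card and set `as_parts` accordingly.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Full call history in the role/content format chat templates expect."""
    system_prompt: str
    messages: list[dict] = field(default_factory=list)

    def add_user(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})

    def as_chat(self, as_parts: bool = False) -> list[dict]:
        """Return system prompt plus history; as_parts wraps text in typed parts."""
        chat = [{"role": "system", "content": self.system_prompt}, *self.messages]
        if as_parts:
            return [
                {"role": m["role"], "content": [{"type": "text", "text": m["content"]}]}
                for m in chat
            ]
        return chat


def render_prompt(tokenizer, conversation: Conversation, as_parts: bool = False) -> str:
    """Format the whole history with the model's own chat template."""
    return tokenizer.apply_chat_template(
        conversation.as_chat(as_parts),
        tokenize=False,
        add_generation_prompt=True,
    )
```

Because nothing is trimmed, the prompt grows with every turn; that is the behavior a long-context model is meant to exploit, at the cost of more tokens to process per reply.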

Step 5: Synthesize the Voice Response with TTS

The text response from MiniMax-Text-01 must be converted back into audio.

- How it Works: The generated text is passed to your chosen TTS engine. MiniMax offers its own AI TTS and voice cloning services, or you can use other popular platforms like ElevenLabs. A streaming TTS service is critical here, because it lets playback begin before the full response has been synthesized.
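A common way to take advantage of streaming TTS is to forward the model’s output one sentence at a time instead of waiting for the full reply. The sketch below splits a stream of text pieces at sentence boundaries. The function name and the punctuation-based rule are assumptions chosen for illustration, not part of any TTS vendor’s API.

```python
import re
from collections.abc import Iterable, Iterator

# Split on whitespace that follows sentence-ending punctuation.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(pieces: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a stream of text pieces."""
    buffer = ""
    for piece in pieces:
        buffer += piece
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last one ends with sentence punctuation.
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        buffer = parts[-1]
    if buffer.strip():
        # Flush whatever is left when the stream ends.
        yield buffer.strip()
```

This simple rule will also split after abbreviations such as "Dr.", so a production system would likely need a more careful boundary detector.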

Step 6: Deliver the Response Instantly via FreJun

The final, crucial step is playing the bot’s voice to the caller.

- How it Works: You pipe the synthesized audio stream from your TTS service directly to the FreJun API. Our platform plays this audio to the caller over the phone line with minimal delay, completing the conversational loop of your voice bot using MiniMax-Text-01.
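Steps 3 through 6 can be sketched as a single turn handler. Every callable below is a placeholder for a real service client; none of these names or signatures come from FreJun, MiniMax, or any ASR or TTS vendor. A production version would also be asynchronous and would handle interruptions, which this sketch omits.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class VoiceTurnPipeline:
    """One conversational turn: audio in -> text -> reply -> audio out."""
    transcribe: Callable[[bytes], str]            # ASR client (step 3), hypothetical
    generate: Callable[[list], str]               # LLM endpoint (step 4), hypothetical
    synthesize: Callable[[str], Iterable[bytes]]  # streaming TTS (step 5), hypothetical
    play: Callable[[bytes], None]                 # telephony transport (step 6), hypothetical
    history: list = field(default_factory=list)

    def handle_turn(self, caller_audio: bytes) -> str:
        user_text = self.transcribe(caller_audio)
        self.history.append({"role": "user", "content": user_text})

        # The full history is sent on every turn, not just the latest message.
        reply = self.generate(self.history)
        self.history.append({"role": "assistant", "content": reply})

        for audio_chunk in self.synthesize(reply):
            # Forward each chunk as soon as it is ready instead of
            # waiting for the whole utterance to be synthesized.
            self.play(audio_chunk)
        return reply
```

Injecting the four services as callables keeps the orchestration logic testable with simple fakes and lets you swap ASR or TTS providers without touching the turn handler.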

DIY Infrastructure vs. FreJun: A Head-to-Head Comparison

When building a voice bot using MiniMax-Text-01, you face a critical build-vs-buy decision for your voice infrastructure. The choice will define your project’s speed, cost, and ultimate success.

| Feature / Aspect | DIY Telephony Infrastructure | FreJun’s Voice Platform |
| --- | --- | --- |
| Primary Focus | 80% of your resources are spent on complex telephony, multi-GPU hardware management, and latency optimization. | 100% of your resources are focused on building and refining the AI reasoning and conversational experience. |
| Time to Market | Extremely slow (months or years). Requires hiring a team with rare telecom and hardware expertise. | Extremely fast (days to weeks). Our developer-first APIs and SDKs abstract away all the complexity. |
| Latency | A constant and difficult battle to minimize the conversational delays that make bots feel robotic. | Engineered for low latency. Our entire stack is optimized for the demands of real-time voice AI. |
| Scalability & Reliability | Requires massive capital investment in redundant hardware, carrier contracts, and 24/7 monitoring. | Built-in. Our platform is built on a resilient, high-availability infrastructure designed to scale with your business. |
| Maintenance | You are responsible for managing hardware, software dependencies, carrier relationships, and troubleshooting complex failures. | We provide guaranteed uptime, enterprise-grade security, and dedicated integration support from our team of experts. |

Best Practices for Optimizing Your MiniMax-Text-01 Voice Bot

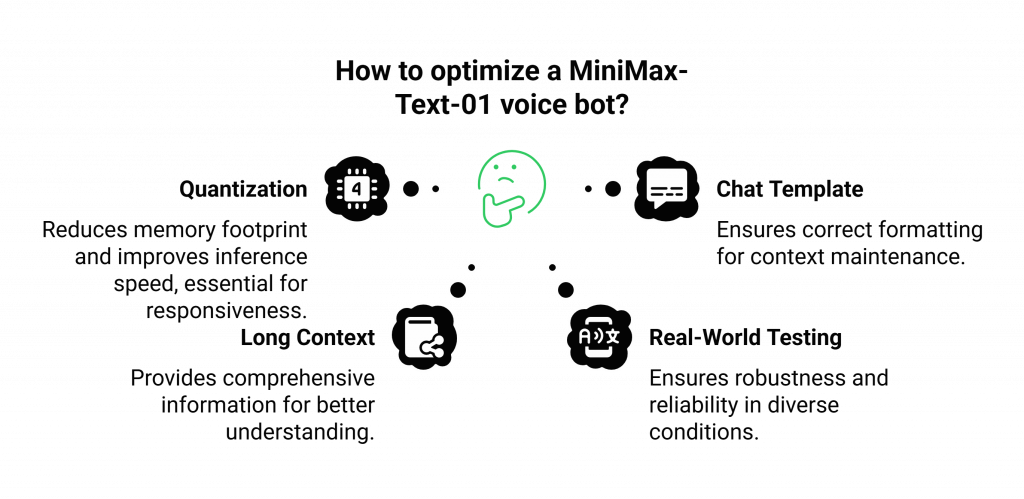

Building the pipeline is the first step. To create a truly effective voice bot using MiniMax-Text-01, follow these best practices:

- Leverage Quantization: When deploying the model, apply quantization (int8 is recommended). This significantly reduces the memory footprint and can improve inference speed, which is critical for a responsive voice bot.

- Master the Chat Template: Correctly formatting your conversational history using the model’s specific chat template is non-negotiable. This is essential for the model to understand the flow of the conversation and maintain context.

- Embrace the Long Context: Provide the model with as much relevant information as possible in the prompt, such as the full conversation history, user manuals, and previous support tickets. This is the model’s key strength.

- Test in Real-World Conditions: Move beyond testing with clean audio. Use real phone calls and test with diverse accents, background noise, and varying connection quality to ensure your voice bot using MiniMax-Text-01 is robust and reliable.
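To make the long-context practice concrete, the sketch below assembles reference material into a single prompt section under a token budget. Even with a very large window, every extra token adds processing time, so a cap is a reasonable safeguard for a latency-sensitive voice bot. `pack_context` is a hypothetical helper, and the default whitespace word count is only a crude stand-in for the model’s real tokenizer.

```python
def pack_context(
    documents: list[tuple[str, str]],
    token_budget: int,
    count_tokens=lambda text: len(text.split()),  # assumption: rough word count
) -> str:
    """Concatenate (title, body) documents in priority order within a budget."""
    sections, used = [], 0
    for title, body in documents:
        cost = count_tokens(body)
        if used + cost > token_budget:
            # Skip documents that do not fit; a smaller one later may still fit.
            continue
        sections.append(f"## {title}\n{body}")
        used += cost
    return "\n\n".join(sections)
```

Ordering the input list by importance (for example, the current ticket first and the full manual last) means the most useful material is always included when the budget is tight.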

Pro Tip: For an even more personalized experience, explore MiniMax’s voice cloning and TTS features. They let you create a unique, natural-sounding voice for your brand’s AI agent, further enhancing the customer experience of your voice bot using MiniMax-Text-01.

From Long Context to Long-Term Value

The availability of models like MiniMax-Text-01, with its massive context window, marks a significant milestone in AI development. The ability to create automated agents that can remember and reason over vast amounts of information unlocks a new tier of customer support automation.

By building on FreJun’s infrastructure, you make a strategic decision to bypass the most significant risks and costs associated with voice AI development. You can focus your valuable resources on what you do best: creating an intelligent, context-aware, and valuable customer experience with your custom voice bot using MiniMax-Text-01. Let us handle the complexities of telephony, so you can build the future of your business communications with confidence.

Further Reading – Voice Conversational AI: Real-Time Implementation Guide

Frequently Asked Questions (FAQs)

What is MiniMax-Text-01?

MiniMax-Text-01 is a powerful large language model that stands out due to its extremely long context window (up to 4 million tokens). This makes it exceptionally good at handling long, complex conversations and referencing large documents, which is ideal for advanced customer support.

Does FreJun provide the AI model?

No. FreJun is the specialized voice infrastructure layer. Our platform is model-agnostic, meaning you bring your own AI model (like MiniMax-Text-01), Automatic Speech Recognition (ASR), and Text-to-Speech (TTS) services. This gives you complete control and flexibility.

What is a context window, and why does it matter for a voice bot?

A context window is the amount of text the model can “remember” from the current conversation. A long context window, like that of MiniMax-Text-01, allows the voice bot to consider a vast amount of information (like a full user manual or a long chat history) before answering, leading to more accurate and informed responses.

Why is low latency important for a voice bot?

Low latency is essential for a natural conversation. Long delays between a user speaking and the bot replying create awkward silences and lead to users interrupting the bot, causing a frustrating and ineffective experience.