For years, automated customer support has been a source of frustration for customers and a compromise for businesses. We have all experienced the endless loops of rigid IVR systems and dealt with chatbots giving outdated, irrelevant information. These systems fail because they rely on static scripts and lack real-time understanding of the world. They can’t keep up with breaking news, trending topics, or the dynamic nature of modern customer queries.

Table of contents

- The Production Wall: Why Your Voice AI Project Fails

- The Solution: A World-Class Brain with an Enterprise-Grade Voice

- How to Build an xAI Grok Voice Bot for Customer Support? A Production Guide

- DIY Infrastructure vs. FreJun: A Head-to-Head Comparison

- Best Practices for Deploying Your Voice Bot

- Final Thoughts: From AI Model to Tangible Business Asset

- Frequently Asked Questions (FAQs)

The demand today is for something more. Customers expect support that is not only intelligent but also current, witty, and context-aware. This is where a new generation of AI models like xAI’s Grok is set to redefine the industry. With its ability to pull real-time information from the X platform, Grok offers a level of immediacy that traditional models cannot match. The challenge, however, is not just finding a smart AI; it’s giving that AI a clear, reliable voice.

The Production Wall: Why Your Voice AI Project Fails

The excitement around powerful models like Grok often leads development teams down a promising but perilous path. They build impressive demos where a bot, running on a local machine, interacts flawlessly through a computer microphone. But the moment they try to scale this demo into a production system that can handle real phone calls, they hit a wall.

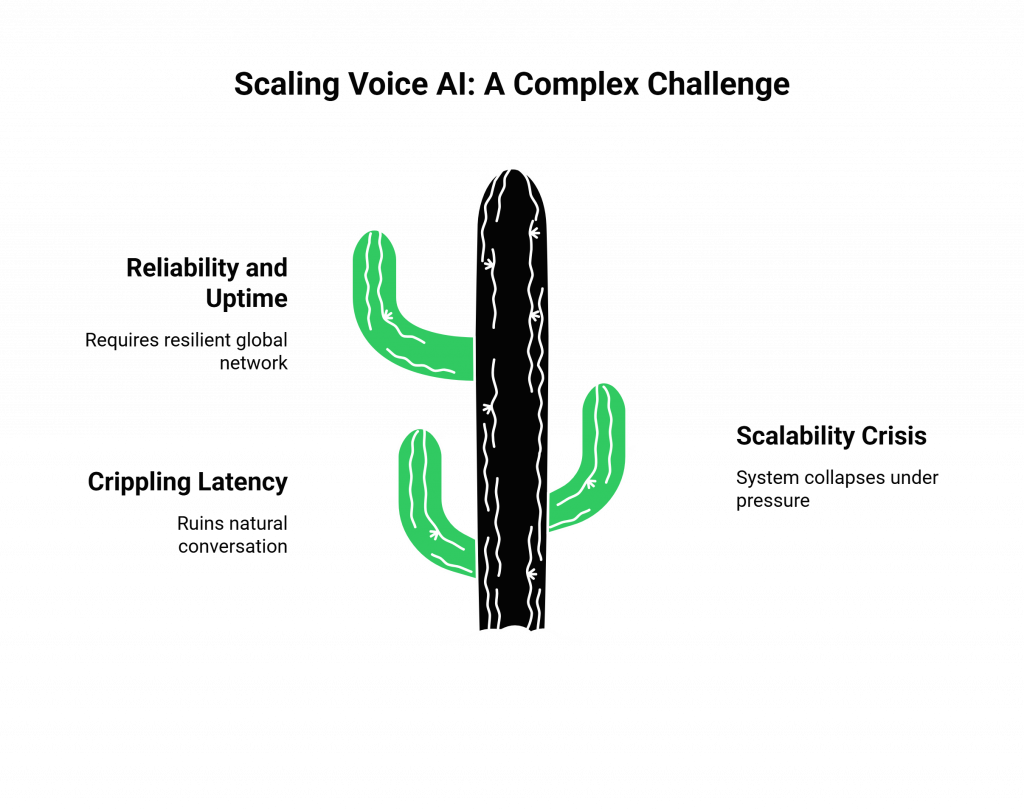

This production wall is built from the immense complexity of voice infrastructure. Connecting an AI to the global telephone network in real time is a major engineering challenge with three recurring obstacles:

- Crippling Latency: The delay between a customer speaking and the bot responding is the number one killer of a natural conversation. High latency creates awkward pauses, leading to interruptions and a fundamentally broken experience.

- The Scalability Crisis: A system that works for one call will collapse under the pressure of hundreds of concurrent calls during peak business hours.

- Reliability and Uptime: Real-time voice requires a resilient, geographically distributed network to ensure high availability and clear audio quality. Such a network is expensive and complex to build and maintain.

This infrastructure problem is the primary reason why so many promising voice AI projects fail, consuming valuable time and resources on “plumbing” instead of perfecting the AI.

The Solution: A World-Class Brain with an Enterprise-Grade Voice

To build a voice bot that delivers real business value, you need to combine a state-of-the-art AI “brain” with a robust, low-latency “voice.” This is where the synergy between xAI’s Grok and FreJun’s voice infrastructure creates a powerful, production-ready solution.

- The Brain (xAI Grok): Grok is an advanced AI model that delivers quick, conversational responses and uniquely integrates real-time data from the X platform. It’s the ideal engine for an up-to-the-minute customer support agent.

- The Voice (FreJun): FreJun handles the complex voice infrastructure so you can focus on building your AI. Our platform is the critical transport layer that connects your Grok application to your customers over any telephone line. We architect our system for the speed and clarity that a real-time xAI Grok voice bot for customer support demands.

By pairing Grok’s intelligence with FreJun’s infrastructure, you can bypass the biggest hurdles in voice bot development and move directly to creating exceptional customer experiences.

How to Build an xAI Grok Voice Bot for Customer Support? A Production Guide

Building a functional voice bot requires a pipeline of specialized technologies working in concert. Here is a high-level guide to building a production-ready system for automating calls.

Step 1: Set Up Your Grok API Access

Before your bot can think, you need to connect to its brain.

- How it Works: Register for a developer account on the xAI Developer Portal to obtain your API keys. You will need to handle authentication by passing a Bearer token in the header of your API requests.
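As a minimal sketch of the Bearer-token step, the helper below builds the request headers from an API key. The environment variable name XAI_API_KEY and the function name are assumptions made for illustration, not names defined by xAI.

```python
import os


def build_auth_headers(api_key: str) -> dict[str, str]:
    """Return HTTP headers that carry the API key as a Bearer token."""
    if not api_key:
        raise ValueError("API key is empty; set it before calling the API")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


# XAI_API_KEY is an assumed variable name; "demo-key" is a placeholder
# so the sketch runs without real credentials.
headers = build_auth_headers(os.environ.get("XAI_API_KEY", "demo-key"))
```

Reading the key from the environment keeps it out of source control, which matters once the bot moves from a demo to production.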

Step 2: Capture Real-Time Call Audio with FreJun

Every real-world interaction begins with a phone call.

- How it Works: The call is routed through the FreJun platform. Our API securely captures the caller’s voice and provides your application with a low-latency audio stream. This is the essential first step for any production-grade voice application.

Step 3: Transcribe Speech to Text (ASR)

The raw audio stream from FreJun needs to be converted into text.

- How it Works: You stream the audio to your chosen Automatic Speech Recognition (ASR) service. The resulting transcription is then passed to your AI application.
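Many streaming ASR services expect audio in small, evenly sized frames, while a network stream tends to deliver chunks of arbitrary size. The sketch below regroups incoming bytes into fixed-size frames before they are forwarded. The audio format (8 kHz, 16-bit mono PCM, 20 ms frames) is an assumption typical of telephony, not a documented FreJun or ASR requirement.

```python
from typing import Iterable, Iterator

# Assumed format: 8 kHz, 16-bit mono PCM, 20 ms per frame -> 320 bytes.
SAMPLE_RATE_HZ = 8000
BYTES_PER_SAMPLE = 2
FRAME_MS = 20
FRAME_BYTES = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * FRAME_MS // 1000


def rechunk(stream: Iterable[bytes], frame_bytes: int = FRAME_BYTES) -> Iterator[bytes]:
    """Regroup arbitrarily sized audio chunks into fixed-size frames."""
    buffer = bytearray()
    for chunk in stream:
        buffer.extend(chunk)
        # Emit every complete frame currently held in the buffer.
        while len(buffer) >= frame_bytes:
            yield bytes(buffer[:frame_bytes])
            del buffer[:frame_bytes]
    if buffer:
        # Flush the final partial frame so no trailing audio is lost.
        yield bytes(buffer)
```

Because rechunk is a generator, frames are handed to the ASR client as soon as they are complete rather than after the caller has finished speaking.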

Step 4: Generate a Response with the Grok API

This is where the core AI logic happens.

- How it Works: Your application sends the transcribed text, along with the maintained conversation history, to the Grok API’s chat completions endpoint (/chat/completions). You’ll specify the model (e.g., ‘grok-1’; check xAI’s documentation for the model IDs currently available to your account) and can control the output with parameters like temperature and max_tokens.
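The request described above might look like the following sketch, which uses only the Python standard library. The URL, the model name, and the response shape (an OpenAI-style choices list) are assumptions to verify against xAI's API reference; the function names are hypothetical.

```python
import json
import urllib.request

# Assumed values: confirm the base URL and model ID in xAI's documentation.
API_URL = "https://api.x.ai/v1/chat/completions"
MODEL = "grok-1"  # placeholder taken from this guide; substitute a current model ID


def build_request(history: list[dict[str, str]], user_text: str,
                  temperature: float = 0.3, max_tokens: int = 150) -> dict:
    """Build the JSON payload: prior turns plus the new user utterance."""
    messages = history + [{"role": "user", "content": user_text}]
    return {
        "model": MODEL,
        "messages": messages,
        "temperature": temperature,  # lower values favor factual answers
        "max_tokens": max_tokens,    # short replies keep spoken turns brief
    }


def send_request(payload: dict, api_key: str, timeout: float = 10.0) -> str:
    """POST the payload and return the reply text (assumed response shape)."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

A low temperature and a small max_tokens value are a reasonable starting point for phone support, where short and predictable answers are easier to listen to than long ones.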

Step 5: Convert Text back to Speech (TTS)

The system must transform Grok’s text response into a human-like voice.

- How it Works: The system passes the generated text to a Text-to-Speech (TTS) engine like ElevenLabs or Google Text-to-Speech. Using a streaming TTS service is crucial for minimizing response latency.
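One common way to cut perceived latency is to start synthesis as soon as the first sentence of the reply is complete, instead of waiting for the whole response. The sketch below splits a stream of text fragments into sentences. It is an illustrative helper, not part of any TTS vendor's SDK, and the sentence detection is deliberately naive (it would split after an abbreviation such as "Dr.").

```python
import re
from typing import Iterable, Iterator

# A sentence ends at ., ! or ? followed by whitespace.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a text stream."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence.
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        buffer = parts[-1]
    if buffer.strip():
        # Whatever remains when the stream ends is the final sentence.
        yield buffer.strip()
```

Each yielded sentence can be sent to the TTS engine immediately, so the caller hears the first sentence while the rest of the reply is still being generated.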

Step 6: Deliver the Voice Response Instantly via FreJun

The final step is to play the bot’s audio back to the caller.

- How it Works: The audio from your TTS service is piped directly to the FreJun API. Our platform streams this audio back to the caller over the call with minimal delay, completing the conversational loop for a fluid, interactive xAI Grok voice bot for customer support.
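Taken together, the six steps form one loop per caller utterance. The sketch below wires the stages as plain callables so that each one can be swapped independently. All names are hypothetical, and the lambdas are stand-ins for real ASR, Grok, and TTS clients.

```python
from typing import Callable

Transcribe = Callable[[bytes], str]              # ASR: audio -> text
Generate = Callable[[list[dict[str, str]]], str]  # LLM: history -> reply text
Synthesize = Callable[[str], bytes]              # TTS: text -> audio


def handle_turn(audio: bytes, history: list[dict[str, str]],
                transcribe: Transcribe, generate: Generate,
                synthesize: Synthesize) -> bytes:
    """Run one conversational turn and return the audio to play back."""
    user_text = transcribe(audio)
    history.append({"role": "user", "content": user_text})
    reply = generate(history)
    history.append({"role": "assistant", "content": reply})
    return synthesize(reply)


# Stub stages so the sketch runs without any external service.
history = [{"role": "system", "content": "You are a concise support agent."}]
audio_out = handle_turn(
    b"\x00\x01",
    history,
    transcribe=lambda audio: "where is my order",
    generate=lambda msgs: f"You asked: {msgs[-1]['content']}",
    synthesize=lambda text: text.encode("utf-8"),
)
```

Passing the stages in as arguments mirrors the model-agnostic design described in this guide: replacing the ASR or TTS provider means changing one callable, not the loop.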

DIY Infrastructure vs. FreJun: A Head-to-Head Comparison

When building an xAI Grok voice bot for customer support, you have a fundamental choice: build the telephony infrastructure yourself or leverage a specialized platform. The difference in focus, cost, and speed is stark.

| Feature / Aspect | DIY / Native Approach | FreJun’s Solution |
| --- | --- | --- |
| Primary Focus | 80% of resources are spent on “plumbing”: managing SIP trunks, carriers, and latency. | 100% of resources are focused on what matters: building and refining the AI experience. |
| Time to Market | Extremely slow (months to years). Requires hiring a team with specialized telecom engineering skills. | Extremely fast (days to weeks). Our developer-first APIs and SDKs abstract away all the complexity. |
| Latency | A constant and difficult engineering challenge that leads to awkward, robotic conversations. | Engineered for low latency. Our entire stack is optimized for the demands of real-time conversational AI. |
| Scalability & Reliability | Requires massive capital investment in redundant, geo-distributed hardware and 24/7 monitoring. | Built-in. Our platform is built on a resilient, high-availability infrastructure designed to scale with your business. |
| Flexibility | Rigid. You are locked into the specific carriers and technologies you initially build, making it hard to innovate. | Completely model-agnostic. Bring your own Grok API, ASR, and TTS. Swap components as better tech emerges. |

Best Practices for Deploying Your Voice Bot

Creating a powerful xAI Grok voice bot for customer support is just the start. To ensure it is effective and reliable in production, follow these best practices:

- Optimize Your Prompts: Use detailed system prompts and clear instructions to guide the model’s tone, personality, and response style. This is essential for maintaining brand consistency.

- Manage API Parameters: Thoughtfully use parameters like temperature and top_p to balance between creative, witty responses and factual accuracy, depending on the use case.

- Implement Robust Error Handling: Your code must gracefully handle API failures, rate limits, and timeouts. Implement retry logic so your bot remains responsive even with transient network issues.

- Maintain Dialogue Context: Meticulously manage the multi-turn conversation history sent with each API call. This is the foundation of Grok’s ability to have a coherent, natural conversation.
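The retry advice above can be sketched as exponential backoff with jitter. TransientError is a hypothetical marker exception; in a real client you would map timeouts, rate-limit responses, and server errors onto it. The attempt count and delays are illustrative defaults, not recommended values.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, rate limits)."""


def call_with_retry(func: Callable[[], T], max_attempts: int = 3,
                    base_delay: float = 0.2,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call func, retrying transient failures with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            delay = base_delay * 2 ** (attempt - 1)
            # Jitter spreads retries out so many calls do not retry in lockstep.
            sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError("unreachable")
```

On a live call the total retry time should stay short, since every retry is silence the caller has to sit through. When the final attempt fails, playing a brief fallback message is usually better than retrying further.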

Final Thoughts: From AI Model to Tangible Business Asset

The emergence of models like xAI’s Grok represents a paradigm shift for business communication. The ability to deploy genuinely intelligent, real-time conversational AI is no longer a futuristic concept; it’s a practical reality and a powerful competitive advantage. A well-designed xAI Grok voice bot for customer support can do more than just answer questions; it can enhance customer satisfaction, provide up-to-the-minute information, and drive brand engagement.

By building on FreJun’s infrastructure, you are making a strategic decision to focus on value, not on plumbing. You are free to harness the full power of one of the world’s most unique AI models, confident that its voice will be clear, reliable, and ready to scale globally. Stop wrestling with telephony complexity and start building the future of your customer experience. This is how you turn a powerful AI model into a tangible business asset.


Frequently Asked Questions (FAQs)

What is xAI’s Grok?

Grok is an advanced AI model from xAI, designed for conversational interactions. Its key feature is its ability to access real-time information from the X (formerly Twitter) platform, allowing it to provide witty and current responses.

Does FreJun provide the AI model?

No. FreJun is a specialized voice transport layer. We provide the real-time audio streaming and call management infrastructure. Our platform is model-agnostic, meaning you bring your own AI (like Grok), ASR, and TTS services. This gives you maximum flexibility and control.

Why is low latency important for a voice bot?

Low latency is critical for creating a natural conversational flow. Long delays between a user speaking and the bot responding lead to awkward pauses and interruptions, making the experience feel robotic and frustrating. FreJun is engineered to minimize this latency.

How do I get access to the Grok API?

You can get API keys by signing up for an X Premium+ subscription and then registering for a developer account on the xAI Developer Portal.

Can the bot handle multi-turn conversations?

Yes. The key to enabling multi-turn conversations is maintaining the conversation history. With each new user input, your application should send the entire chat history back to the Grok API, giving the model full context for its next response.
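A small history container makes this pattern concrete. The sketch below keeps the system prompt fixed and returns the full message list for each API call. The class name is hypothetical, and the max_turns cap is an added assumption for very long calls, where unbounded history would eventually exceed the model's context limit; raise it if you want to send everything.

```python
class Conversation:
    """Holds the system prompt plus recent user/assistant exchanges."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        self.system = {"role": "system", "content": system_prompt}
        self.max_turns = max_turns  # number of user/assistant pairs to keep
        self.turns: list[dict[str, str]] = []

    def add(self, role: str, content: str) -> None:
        """Append a message, dropping the oldest exchange when over the cap."""
        self.turns.append({"role": role, "content": content})
        # Trim in pairs so the history always starts with a user message.
        while len(self.turns) > 2 * self.max_turns:
            del self.turns[:2]

    def messages(self) -> list[dict[str, str]]:
        """Return the list to send as the messages field of the request."""
        return [self.system, *self.turns]


chat = Conversation("You are a concise support agent.", max_turns=1)
chat.add("user", "Where is my order?")
chat.add("assistant", "It shipped today.")
chat.add("user", "When will it arrive?")
```

With max_turns set to 1, the third message pushes out the first exchange, so chat.messages() contains only the system prompt and the latest user question.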