The world of conversational AI is undergoing a radical transformation, and at the heart of this revolution is the power of open-weight models. For developers, the ability to build AI voice agents using Mistral Medium 3 represents a new era of freedom and control. No longer are you locked into a single proprietary ecosystem. You can now handpick your entire AI stack, creating a truly custom “brain” that is perfectly tailored to your business needs, balancing immense power with remarkable efficiency.

Table of contents

- The New Era of AI: The Freedom of Open-Weight Models

- The Hidden Challenge: A Brilliant Bot Trapped in Your Data Center

- FreJun: The Voice Infrastructure Layer for Your Custom AI Agent

- DIY Telephony vs. A FreJun-Powered Agent: A Strategic Comparison

- How to Build AI Voice Agents That Can Answer the Phone?

- Best Practices for a Flawless Implementation

- Final Thoughts

- Frequently Asked Questions (FAQ)

This freedom is intoxicating. You can pair the advanced reasoning of Mistral Medium 3 with best-in-class speech recognition and synthesis engines to create a unique and powerful conversational experience. However, after the initial success of building this intelligent core, many teams run into a formidable and often project-killing roadblock. Their brilliant, custom-built creation is trapped, unable to connect to the most critical channel for any real-world business application: the telephone network.

The New Era of AI: The Freedom of Open-Weight Models

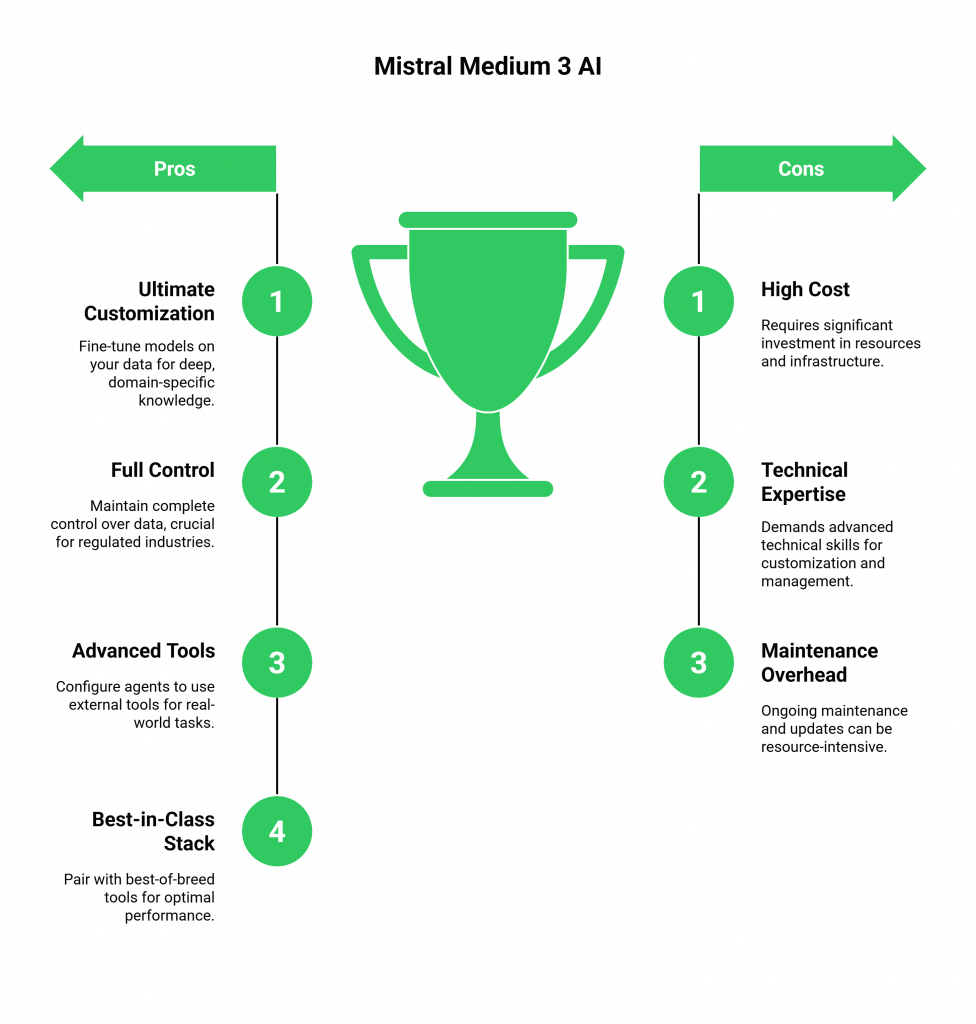

The rise of powerful, accessible models like Mistral Medium 3 has fundamentally changed the game. When you build AI voice agents using Mistral Medium 3, you gain a unique set of advantages:

- Ultimate Customization: You can fine-tune Mistral models on your own data, giving them deep, domain-specific knowledge that a generic, off-the-shelf model could never achieve.

- Full Control and Privacy: Where your deployment lets you host the model in your own environment, you keep control over where your data is processed and stored, a critical consideration for businesses in regulated industries.

- Advanced Tool Use: Mistral Medium 3 agents can be configured to use external tools, such as web search, code execution, or custom API functions. This allows your agent to perform real-world tasks and provide up-to-the-minute information.

- A Best-in-Class Stack: You have the freedom to assemble a “dream team” of components, pairing Mistral Medium 3’s powerful NLU with other best-of-breed open-source or commercial tools for Automatic Speech Recognition (ASR) and Text-to-Speech (TTS).

This flexibility allows developers to build a truly differentiated AI brain, tailored to their specific needs.
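To make the tool-use point above more concrete, here is a minimal sketch of how a backend might describe a tool and execute a call the model requests. The tool description follows the JSON-schema "function" style commonly used by function-calling chat APIs. The function `get_appointment_slots`, the `REGISTRY` mapping, and `run_tool_call` are hypothetical names invented for this illustration, and the request and response plumbing to the model is deliberately left out.

```python
import json
from typing import Any, Callable, Dict, List


# Hypothetical business function the agent is allowed to call (illustration only).
def get_appointment_slots(date: str) -> List[str]:
    return [f"{date} 09:00", f"{date} 14:30"]


# Tool description in the JSON-schema "function" style used by
# function-calling chat APIs. This list is what you would pass to the model.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_appointment_slots",
            "description": "List free appointment slots for a given date.",
            "parameters": {
                "type": "object",
                "properties": {
                    "date": {"type": "string", "description": "ISO date, e.g. 2025-01-31"}
                },
                "required": ["date"],
            },
        },
    }
]

# Maps tool names to the Python callables that implement them.
REGISTRY: Dict[str, Callable[..., Any]] = {
    "get_appointment_slots": get_appointment_slots,
}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Execute a tool the model asked for and return the result as a JSON string."""
    if name not in REGISTRY:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(arguments_json)
        result = REGISTRY[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong argument names: report back instead of crashing.
        return json.dumps({"error": str(exc)})
    return json.dumps({"result": result})
```

Returning errors as JSON rather than raising lets the model see what went wrong and retry or apologize, which matters on a live call where a crash means silence on the line.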

The Hidden Challenge: A Brilliant Bot Trapped in Your Data Center

You have successfully built your custom AI stack. You’ve set up your Mistral API key, initialized your client, and your Mistral Medium 3-powered agent is intelligent, context-aware, and works perfectly in your development environment. Now, it’s time to put it to work. Your business needs it to handle the customer support hotline, qualify sales leads, or automate appointment booking over the phone.

This is where the project grinds to a halt. The problem is that the entire ecosystem of tools used to build your bot (Mistral Medium 3, Whisper for ASR, a custom TTS engine) is designed to process data, not to manage live phone calls. To connect your custom-built agent to the Public Switched Telephone Network (PSTN), you would have to build a highly specialized and complex voice infrastructure from scratch. This involves solving a host of non-trivial engineering challenges:

- Telephony Protocols: Managing SIP (Session Initiation Protocol) trunks and carrier relationships.

- Real-Time Media Servers: Building and maintaining dedicated servers to handle raw audio streams from thousands of concurrent calls.

- Call Control and State Management: Architecting a system to manage the entire lifecycle of every call, from ringing and connecting to holding and terminating.

- Network Resilience: Engineering solutions to mitigate the jitter, packet loss, and latency inherent in voice networks that can destroy the quality of a real-time conversation.
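To give a sense of what the network-resilience item alone involves, here is a deliberately small sketch of a jitter buffer: it holds a few packets, releases them in sequence order, and marks gaps as lost. The class name, the `depth` parameter, and the packet shape are assumptions made for illustration. A production media server would also deal with timestamps, sequence wraparound, adaptive depth, and loss concealment.

```python
import heapq
from typing import Iterator, List, Optional, Tuple


class JitterBuffer:
    """Reorders audio packets by sequence number and flags gaps as lost."""

    def __init__(self, depth: int = 3) -> None:
        self.depth = depth  # number of packets held back before release
        self._heap: List[Tuple[int, bytes]] = []
        self._next_seq: Optional[int] = None  # next sequence number to play

    def push(self, seq: int, payload: bytes) -> None:
        if self._next_seq is not None and seq < self._next_seq:
            return  # arrived too late: playout has already passed this packet
        heapq.heappush(self._heap, (seq, payload))

    def pop_ready(self) -> Iterator[Tuple[int, Optional[bytes]]]:
        """Yield (seq, payload) in order; payload is None for a lost packet."""
        while len(self._heap) > self.depth:
            seq, payload = heapq.heappop(self._heap)
            if self._next_seq is None:
                self._next_seq = seq
            while self._next_seq < seq:
                yield self._next_seq, None  # gap: packet never arrived in time
                self._next_seq += 1
            if seq == self._next_seq:  # anything else is a duplicate
                yield seq, payload
                self._next_seq += 1
```

The trade-off sits in `depth`: a deeper buffer tolerates more reordering but adds delay to every packet, and on a phone call that delay is heard directly as lag.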

Suddenly, your AI project has become a grueling telecom engineering project, pulling your team away from its core mission of building an intelligent and effective bot. The freedom you gained by using an open-weight model is lost in the rigid, complex world of telephony.

FreJun: The Voice Infrastructure Layer for Your Custom AI Agent

This is the exact problem FreJun was built to solve. We are not another AI model or a closed ecosystem. We are the specialized voice infrastructure platform that provides the missing layer, allowing you to connect your custom agent to the telephone network with a simple, powerful API. FreJun is the key to letting you build AI voice agents using Mistral Medium 3 that are both powerful and reachable.

We handle all the complexities of telephony, so you can focus on perfecting your unique AI stack.

- We are AI-Agnostic: You bring your own “brain.” FreJun integrates seamlessly with any backend, allowing you to use your custom Mistral Medium 3, ASR, and TTS stack.

- We Manage the Voice Transport: We handle the phone numbers, the SIP trunks, the global media servers, and the low-latency audio streaming.

- We are Developer-First: Our platform makes a live phone call look like just another WebSocket connection to your application, abstracting away all the underlying telecom complexity.

With FreJun, you can maintain the full freedom of a custom AI stack while leveraging the reliability and scalability of an enterprise-grade voice network.

DIY Telephony vs. A FreJun-Powered Agent: A Strategic Comparison

| Feature | The Full DIY Approach (Including Telephony) | Your Custom Mistral Medium 3 Stack + FreJun |
| --- | --- | --- |
| Infrastructure Management | You build, maintain, and scale your own voice servers, SIP trunks, and network protocols. | Fully managed. FreJun handles all telephony, streaming, and server infrastructure. |
| Scalability | Extremely difficult and costly to build a globally distributed, high-concurrency system. | Built-in. Our platform elastically scales to handle any number of concurrent calls on demand. |
| Development Time | Months, or even years, to build a stable, production-ready telephony system. | Weeks. Launch your globally scalable voice bot in a fraction of the time. |
| Developer Focus | Divided 50/50 between building the AI and wrestling with low-level network engineering. | 100% focused on building the best possible conversational experience. |
| Maintenance & Cost | Massive capital expenditure and ongoing operational costs for servers, bandwidth, and a specialized DevOps team. | Predictable, usage-based pricing with no upfront capital expenditure and zero infrastructure maintenance. |

How to Build AI Voice Agents That Can Answer the Phone?

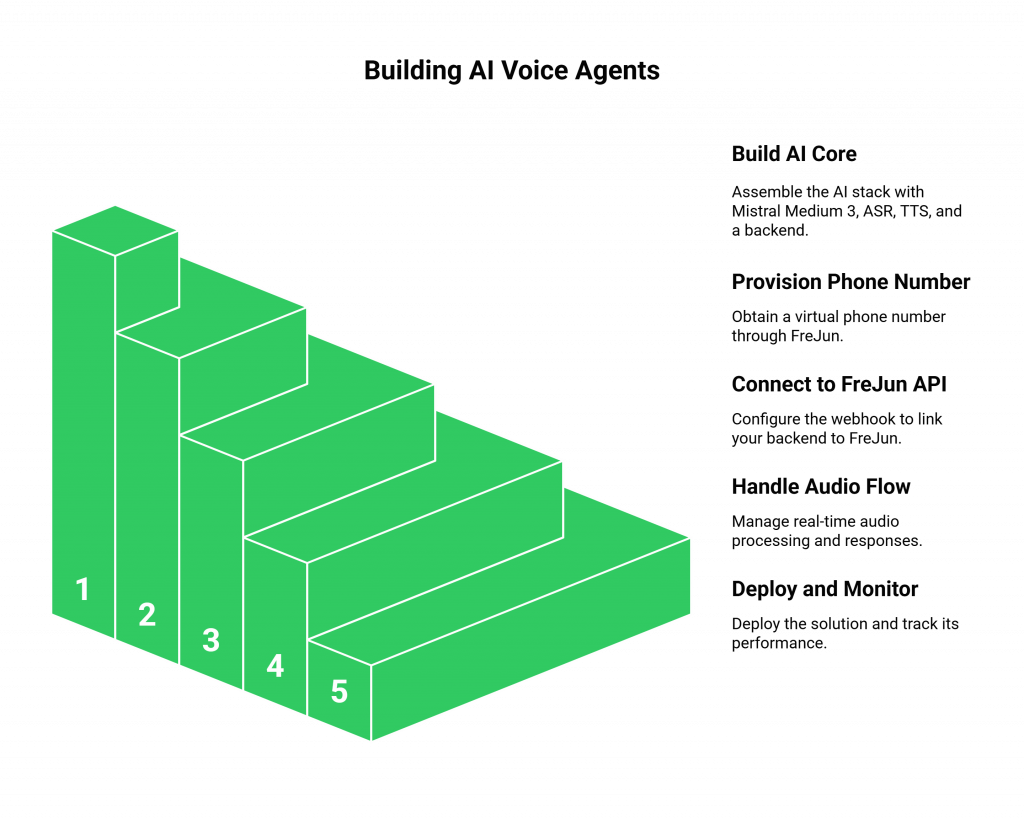

This step-by-step guide outlines the modern, efficient process for taking your custom-built Mistral Medium 3-powered agent from your local machine to a production-ready telephony deployment.

Step 1: Build Your AI Core

First, assemble your custom AI stack.

- Set up your Mistral Medium 3 Model: Install the mistralai Python SDK, configure your API key, and create an agent object with your desired model and instructions.

- Integrate ASR and TTS: Install and configure your chosen speech recognition engine (like Whisper) and text-to-speech engine (like ElevenLabs, Piper, or PlayHT).

- Orchestrate with a Backend: Write a backend application (e.g., in Python) that orchestrates these components. This is where you will manage the conversational logic and context.
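The three pieces above could be wired together along these lines. This is a minimal sketch, not a reference implementation: `VoiceAgent` and its field names are invented for illustration, and the ASR, LLM, and TTS engines are passed in as plain callables so that the example runs without any external service. In a real backend, `generate` would wrap a chat call made through the mistralai SDK, and `transcribe` and `synthesize` would wrap your chosen speech engines.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass
class VoiceAgent:
    """Holds one conversation and runs audio -> text -> reply -> audio."""

    transcribe: Callable[[bytes], str]        # ASR: caller audio -> text
    generate: Callable[[List[Message]], str]  # LLM: chat history -> reply text
    synthesize: Callable[[str], bytes]        # TTS: reply text -> audio
    system_prompt: str = "You are a helpful phone assistant."
    history: List[Message] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The system message carries the agent's instructions for every turn.
        self.history.append({"role": "system", "content": self.system_prompt})

    def respond(self, caller_audio: bytes) -> bytes:
        user_text = self.transcribe(caller_audio)
        self.history.append({"role": "user", "content": user_text})
        reply = self.generate(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return self.synthesize(reply)


# Stand-in components so the sketch runs on its own; swap in real engines.
agent = VoiceAgent(
    transcribe=lambda audio: audio.decode("utf-8"),
    generate=lambda history: f"You said: {history[-1]['content']}",
    synthesize=lambda text: text.encode("utf-8"),
)
```

Keeping the engines behind simple callables is what makes the stack swappable: replacing Whisper with another ASR engine changes one argument, not the conversation logic.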

Step 2: Provision a Phone Number with FreJun

Instead of negotiating with telecom carriers, simply sign up for FreJun and instantly provision a virtual phone number. This number will be the public-facing identity for your AI agent.

Step 3: Connect Your Backend to the FreJun API

In the FreJun dashboard, configure your new number’s webhook to point to your backend’s API endpoint. This tells our platform where to send live call audio and events. Our server-side SDKs make handling this connection simple.
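As a rough illustration of what the receiving end of that webhook might look like, the sketch below uses only Python's standard library. Everything about the payload is an assumption made for the example: the `event` and `call_id` fields, the event names `call.started` and `call.ended`, the returned `action` values, and the port are hypothetical and do not come from FreJun's documentation, which you should consult for the real event format.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict


def handle_call_event(event: Dict[str, Any]) -> Dict[str, str]:
    """Decide what to do with one call event (hypothetical payload shape)."""
    kind = event.get("event")
    call_id = str(event.get("call_id", "unknown"))
    if kind == "call.started":
        return {"call_id": call_id, "action": "start_session"}
    if kind == "call.ended":
        return {"call_id": call_id, "action": "end_session"}
    return {"call_id": call_id, "action": "ignore"}


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            event = json.loads(self.rfile.read(length) or b"{}")
            if not isinstance(event, dict):
                raise ValueError("expected a JSON object")
        except ValueError:  # also covers json.JSONDecodeError
            self.send_response(400)
            self.end_headers()
            return
        body = json.dumps(handle_call_event(event)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Run the webhook endpoint; call this from your entry point."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

Keeping the decision logic in `handle_call_event`, separate from the HTTP handler, makes it easy to unit test and to move to a different web framework later.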

Step 4: Handle the Real-Time Audio Flow

When a customer dials your FreJun number, our platform answers the call and establishes a real-time audio stream to your backend. Your code will then:

- Receive the raw audio stream from FreJun.

- Pipe this audio to your ASR engine to be transcribed.

- Send the transcribed text to your Mistral Medium 3 model for processing, including the relevant chat history to maintain context.

- Take the AI’s text response and send it to your TTS engine for synthesis.

- Stream the synthesized audio back to the FreJun API, which plays it to the caller with as little added delay as possible.
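The five steps above can be sketched as a single asynchronous loop. This is an illustration under stated assumptions rather than FreJun's actual interface: `incoming_utterances` and `send_audio` stand in for whatever streaming connection the platform provides, each incoming item is assumed to be one complete caller utterance (so end-of-speech detection has already happened), and `run_call`, `trim_history`, and `max_turns` are names invented here. The blocking ASR, LLM, and TTS calls run in worker threads so one slow call does not stall the others.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable, Dict, List

Message = Dict[str, str]


def trim_history(history: List[Message], max_turns: int) -> List[Message]:
    """Keep the system message plus the last max_turns (>= 1) exchanges."""
    system, rest = history[:1], history[1:]
    return system + rest[-2 * max_turns:]


async def run_call(
    incoming_utterances: AsyncIterator[bytes],
    send_audio: Callable[[bytes], Awaitable[None]],
    transcribe: Callable[[bytes], str],
    generate: Callable[[List[Message]], str],
    synthesize: Callable[[str], bytes],
    max_turns: int = 10,
) -> List[Message]:
    """Handle one phone call from first utterance to hang-up."""
    history: List[Message] = [
        {"role": "system", "content": "You are a concise phone assistant."}
    ]
    async for audio in incoming_utterances:  # 1. receive audio
        text = await asyncio.to_thread(transcribe, audio)  # 2. ASR
        history.append({"role": "user", "content": text})
        reply = await asyncio.to_thread(generate, history)  # 3. LLM with context
        history.append({"role": "assistant", "content": reply})
        speech = await asyncio.to_thread(synthesize, reply)  # 4. TTS
        await send_audio(speech)  # 5. stream back to the caller
        # Trim after a full exchange so the kept history starts with a user turn.
        history = trim_history(history, max_turns)
    return history
```

Capping the history keeps each model request small and its latency predictable on long calls, at the cost of forgetting the earliest turns. Summarizing older turns instead is another option.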

Step 5: Deploy and Monitor Your Solution

Deploy your backend application to a scalable cloud provider. If you self-host the ASR, TTS, or language model, run those components in a GPU-accelerated environment to keep inference fast. Once live, use monitoring tools to track your bot’s performance, analyze user interactions, and continuously improve its accuracy and effectiveness.
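For a voice bot, one of the most useful things to monitor is how long each stage of a turn takes, because the caller hears the sum as silence. The sketch below shows one simple way to collect those timings in-process. `StageTimer` and the stage names are hypothetical, and in production you would likely export the numbers to whatever monitoring system you already use.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from statistics import mean
from typing import Dict, Iterator, List


class StageTimer:
    """Collects wall-clock durations per pipeline stage (e.g. asr, llm, tts)."""

    def __init__(self) -> None:
        self.samples: Dict[str, List[float]] = defaultdict(list)

    @contextmanager
    def track(self, stage: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the duration even if the stage raised an exception.
            self.samples[stage].append(time.perf_counter() - start)

    def summary(self) -> Dict[str, Dict[str, float]]:
        """Return count, mean, and max duration in milliseconds per stage."""
        return {
            stage: {
                "count": len(values),
                "mean_ms": mean(values) * 1000,
                "max_ms": max(values) * 1000,
            }
            for stage, values in self.samples.items()
        }
```

Usage is a `with timer.track("asr"):` block around each stage call. Comparing the per-stage means shows where to spend optimization effort first.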

Best Practices for a Flawless Implementation

- Utilize Persistent Conversation Objects: To manage multi-turn, context-rich dialogues, use the persistent conversation objects provided by the Mistral Agents API.

- Enable Tool Use for Dynamic Responses: For agents that need to perform real-time actions, configure them to use external tools. This is a key feature that makes AI voice agents using Mistral Medium 3 so powerful.

- Design for Human Handoff: No AI is perfect. For complex issues, design a clear path to escalate the conversation to a human agent. FreJun’s API can facilitate a seamless live call transfer.

- Secure Your API Keys: Your Mistral API key is a sensitive credential. Never expose it in client-side code. Always manage it securely on your backend using environment variables or a secret manager.
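Two of the practices above, handoff and key handling, are easy to show in a few lines. This is a sketch under assumptions: the environment variable name `MISTRAL_API_KEY`, the phrase list, and the threshold of two failed turns are illustrative choices, and the actual call transfer would be done through FreJun's API, which is not shown here.

```python
import os

# Phrases that suggest the caller wants a person (illustrative, not exhaustive).
HANDOFF_PHRASES = ("human", "agent", "representative", "real person")


def load_api_key(var_name: str = "MISTRAL_API_KEY") -> str:
    """Read the key from the environment and fail fast if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; refusing to start without it")
    return key


def should_hand_off(user_text: str, failed_turns: int, max_failed_turns: int = 2) -> bool:
    """Escalate when the caller asks for a person or the bot keeps failing."""
    text = user_text.lower()
    asked_for_person = any(phrase in text for phrase in HANDOFF_PHRASES)
    return asked_for_person or failed_turns >= max_failed_turns
```

Failing at startup when the key is missing is preferable to discovering the problem on the first live call. The handoff check is intentionally crude, and a keyword list like this is usually a starting point that you refine from real transcripts.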

Final Thoughts

The freedom to build AI voice agents using Mistral Medium 3 and other powerful open-weight models is a revolutionary advantage. It allows you to create a truly unique and differentiated conversational AI experience. But that advantage is lost if your team gets bogged down in the complex, undifferentiated heavy lifting of building and maintaining a global voice infrastructure.

The strategic path forward is to focus your resources where they can create the most value: in the intelligence of your AI, the quality of your conversation design, and the seamless integration with your business logic. Let a specialized platform handle the phone lines.

By partnering with FreJun, you can maintain the full freedom of a custom AI stack while leveraging the reliability, scalability, and speed of an enterprise-grade voice network. You get to build the bot of your dreams, and we make sure it can answer the call.


Frequently Asked Questions (FAQ)

FreJun does not provide the AI model itself. It is a model-agnostic voice infrastructure platform that supplies the API connecting your application to the telephone network. This is the core of our philosophy: you have complete freedom to build your own AI voice agents with any components you choose.

You can connect an agent that runs on your own server. As long as that server has a publicly accessible API endpoint, you can connect it to FreJun’s platform. This is a great way to combine the performance and privacy of a local deployment with the global reach of our network.

The key difference from all-in-one bot builders is control and flexibility. Those builders often bundle the AI logic and the communication channels together, which can limit your choice of models and your ability to customize. The Mistral Medium 3 + FreJun approach lets you pick the model, choose your own components, and build a custom solution that you own and control.

Outbound calling is supported. FreJun’s API provides full, programmatic control over the call lifecycle, including the ability to initiate outbound calls. This allows you to use your custom-built bot for proactive use cases like automated reminders or lead qualification campaigns.