A new generation of powerful, context-aware models is reshaping conversational AI. Amazon’s latest offering lets developers build sophisticated AI voice agents using Amazon Alexa LLM. This is more than an upgrade to the familiar Alexa: with advanced reasoning, contextual speech recognition, and the ability to perform complex, multi-step tasks, the technology provides the “brain” for a new class of intelligent, human-like assistants.

Table of contents

- What Makes the Amazon Alexa LLM a Game-Changer for Voice?

- The Hidden Challenge: An AI Trapped in an Ecosystem

- FreJun: The API That Connects Your Alexa LLM to the World

- Alexa-Only Agent vs. Omnichannel Agent: A Head-to-Head Comparison

- A Step-by-Step Guide: How to Build a Complete AI Voice Agent

- Best Practices for a Flawless Implementation

- Final Thoughts: Your AI is Brilliant. Make Sure It Can Answer the Call

- Frequently Asked Questions (FAQ)

What Makes the Amazon Alexa LLM a Game-Changer for Voice?

Building AI voice agents using Amazon Alexa LLM offers a distinct advantage over piecing together separate components. Amazon has created a deeply integrated ecosystem where the different parts of a conversation work in harmony. Key features include:

- Contextual Speech Recognition: Alexa’s Automatic Speech Recognition (ASR) system isn’t static. It dynamically adapts based on user and device context, leading to higher accuracy and a more natural understanding of what’s being said.

- LLM-Powered Reasoning: At its core, the Alexa LLM is capable of complex, multi-turn dialogues. It can manage conversational state, understand nuanced requests, and generate context-aware responses.

- Advanced Function Calling: This is the key to making an AI agent actionable. The Alexa AI Action SDK allows the bot to directly integrate with your backend APIs. This enables it to perform real-world tasks like booking a flight, reserving a hotel, and providing personalized recommendations based on live data.

The Hidden Challenge: An AI Trapped in an Ecosystem

You have designed a brilliant agent. It’s powered by the Alexa LLM, it’s connected to your business systems via function calling, and it’s ready to revolutionize your customer experience. Now, you need it to answer a phone call from a customer. This is where most projects hit a formidable wall.

The entire Alexa ecosystem operates within Amazon’s “walled garden.” You can access your agent through an Echo speaker, the Alexa mobile app, or other Alexa-enabled devices. However, it cannot natively answer a standard phone call on the Public Switched Telephone Network (PSTN).

This is a massive limitation for any serious business application. Your highest-value customers, your less tech-savvy users, and anyone needing urgent, hands-free support will default to the most trusted communication channel available: the telephone. If your intelligent agent can’t be reached there, it’s failing at its primary job. To solve this, you would have to build your own complex and costly telephony infrastructure, a task that is a massive distraction from building a great AI.

FreJun: The API That Connects Your Alexa LLM to the World

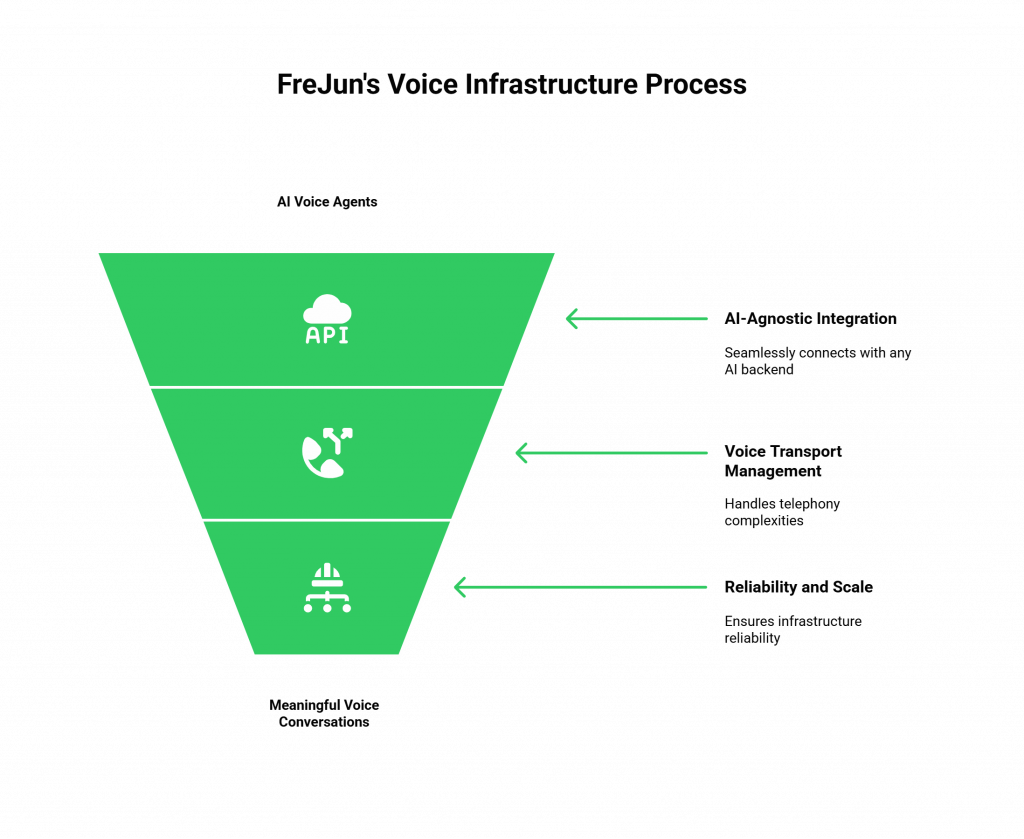

This is the exact problem FreJun was built to solve. We are not another AI platform. We are the specialized voice infrastructure layer that connects AI voice agents built on the Amazon Alexa LLM to the global telephone network.

FreJun provides a simple, developer-first API that handles all the complexities of telephony, so you can focus on building the best AI possible.

- We are AI-Agnostic: You bring your own “brain.” FreJun integrates seamlessly with any backend, allowing you to connect directly to the Alexa LLM API.

- We Manage the Voice Transport: We handle the phone numbers, the SIP trunks, the media servers, and the low-latency audio streaming.

- We Guarantee Reliability and Scale: Our globally distributed, enterprise-grade infrastructure ensures your phone line is always online and ready to handle high call volumes.

FreJun provides the robust “body” that allows your AI “brain” to have a real, meaningful conversation with the outside world via the telephone.

Pro Tip: Leverage Contextual Understanding for Personalization

One of the greatest strengths of AI voice agents using Amazon Alexa LLM is their ability to understand context. When you connect this to a phone line with FreJun, you can supercharge this capability. FreJun provides the caller’s phone number to your backend. Your application can use this to look up the customer in your CRM, retrieve their entire history, and pass that context to the Alexa LLM at the start of the call, enabling a deeply personalized and efficient interaction from the very first word.
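The lookup described above might be sketched as follows. This is a minimal illustration, not FreJun's or Amazon's API: the in-memory `CRM` dictionary, the `Customer` fields, and the function names are all hypothetical, and the sketch assumes the caller's number arrives with its country code.

```python
from dataclasses import dataclass, field


@dataclass
class Customer:
    name: str
    tier: str
    recent_orders: list[str] = field(default_factory=list)


# Hypothetical in-memory CRM keyed by normalized phone number.
# A real system would query a database or CRM service instead.
CRM = {
    "+14155550100": Customer("Dana Lee", "gold", ["Order 1041: headphones"]),
}


def normalize_number(raw: str) -> str:
    """Strip spaces, dashes, and brackets so formatting differences don't break the lookup."""
    digits = "".join(ch for ch in raw if ch.isdigit())
    return "+" + digits


def build_call_context(caller_number: str) -> str:
    """Return a short text summary to hand to the LLM at the start of the call."""
    customer = CRM.get(normalize_number(caller_number))
    if customer is None:
        return "Caller is not in the CRM. Greet them and ask how you can help."
    orders = "; ".join(customer.recent_orders) or "none on record"
    return (
        f"Caller: {customer.name} (tier: {customer.tier}). "
        f"Recent orders: {orders}."
    )


if __name__ == "__main__":
    print(build_call_context("+1 (415) 555-0100"))
```

Keeping the context as a short plain-text summary makes it easy to prepend to whatever prompt or session setup your agent uses.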

Alexa-Only Agent vs. Omnichannel Agent: A Head-to-Head Comparison

| Feature | The Alexa-Only Agent | The Omnichannel Agent (Alexa + FreJun) |
| --- | --- | --- |
| Accessibility | Limited to users with Alexa-enabled devices or the Alexa app. | Universally accessible to anyone with a phone, plus all Alexa channels. |
| Use Cases | Smart home control, personal reminders, in-app tasks. | 24/7 call centers, virtual receptionists, automated phone orders, critical incident support. |
| Infrastructure Burden | Low. Managed within the Amazon ecosystem. | Zero telephony infrastructure to build. FreJun manages the entire voice stack. |
| Business Impact | A modern feature for a segment of tech-savvy users. | A strategic asset that reduces operational costs and serves all customer segments. |
| Customer Journey | Fragmented. A user must switch from a phone call to an app to get automated help. | Unified. A user can interact with the same intelligent assistant across all channels. |

A Step-by-Step Guide: How to Build a Complete AI Voice Agent

This guide outlines the modern, scalable architecture for building AI voice agents using Amazon Alexa LLM that can handle real phone calls.

Step 1: Set Up Your AI Core with the Alexa AI Action SDK

First, get an Amazon Developer account and obtain the necessary credentials. Use the Alexa AI Action SDK to design the core logic of your agent. This is where you will define its personality, its conversational flows, and, most importantly, the tools it can use via function calling to connect to your backend APIs.
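Credentials are easiest to manage when they never appear in source code. Here is a minimal sketch, assuming the keys are supplied as environment variables. The variable names `ALEXA_API_KEY` and `FREJUN_API_KEY` are hypothetical placeholders, not names defined by either platform.

```python
import os
from typing import Mapping

# Hypothetical setting names; use whatever your deployment actually defines.
REQUIRED = ("ALEXA_API_KEY", "FREJUN_API_KEY")


def load_secrets(env: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Read required credentials, failing fast if any are missing or empty."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("Missing required settings: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED}


def redact(secret: str) -> str:
    """Return a log-safe form that shows at most the last four characters."""
    return "***" + secret[-4:] if len(secret) > 8 else "***"
```

Failing at startup when a key is missing is usually preferable to discovering the problem in the middle of a live call.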

Step 2: Architect Your Backend Application

Using your preferred framework (like Python with FastAPI or Node.js with Express), build a backend service that will orchestrate the conversation. This service will be the central hub that communicates with both FreJun and the Alexa LLM.

Step 3: Integrate FreJun for the Voice Channel

This is the critical step that connects your agent to the telephone network.

- Sign up for FreJun and instantly provision a virtual phone number.

- Use FreJun’s server-side SDK in your backend to handle incoming WebSocket connections from our platform.

- In the FreJun dashboard, configure your new number’s webhook to point to your backend’s API endpoint.

Step 4: Implement the Real-Time Conversational Flow

When a customer dials your FreJun number, your backend will spring into action:

- FreJun establishes a WebSocket connection and streams the live audio to your backend.

- Your backend receives the raw audio stream and forwards it to the Alexa ASR and LLM via the AI Action SDK.

- The Alexa LLM processes the audio, understands the intent, and executes any necessary function calls by communicating with your backend.

- The Alexa LLM returns a text response to your backend.

- Your backend sends this text response to Alexa’s TTS service to be synthesized into audio.

- Your backend streams the synthesized audio back to the FreJun API, which plays it to the caller with ultra-low latency.
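The loop above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than either vendor's SDK: the stage signatures (`Transcribe`, `Respond`, `Synthesize`) and `run_call` are hypothetical, the ASR, LLM, and TTS stages are replaced by stubs so the sketch runs on its own, and each inbound chunk is treated as one complete utterance, whereas real streaming audio would need endpoint detection.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable

# Hypothetical stage signatures. Real ASR, LLM, and TTS clients
# would be wrapped in functions that match them.
Transcribe = Callable[[bytes], Awaitable[str]]
Respond = Callable[[str], Awaitable[str]]
Synthesize = Callable[[str], Awaitable[bytes]]


async def run_call(
    inbound_audio: AsyncIterator[bytes],
    send_audio: Callable[[bytes], Awaitable[None]],
    transcribe: Transcribe,
    respond: Respond,
    synthesize: Synthesize,
) -> None:
    """Handle one call: each utterance goes through ASR, then the LLM, then TTS."""
    async for utterance in inbound_audio:
        text = await transcribe(utterance)
        if not text.strip():
            continue  # nothing intelligible was said; wait for the next utterance
        reply = await respond(text)
        await send_audio(await synthesize(reply))


# Stub stages so the sketch runs without any external service.
async def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


async def fake_respond(text: str) -> str:
    return f"You said: {text}"


async def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


async def demo() -> list[bytes]:
    async def caller() -> AsyncIterator[bytes]:
        for chunk in (b"hello", b"   ", b"book a table"):
            yield chunk

    played: list[bytes] = []

    async def send(audio: bytes) -> None:
        played.append(audio)

    await run_call(caller(), send, fake_transcribe, fake_respond, fake_synthesize)
    return played


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Passing the stages in as plain async callables keeps the call loop independent of any one provider, so each stage can be swapped or tested in isolation.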

With this architecture, you have a complete, enterprise-ready AI voice agent built on the Amazon Alexa LLM.

Best Practices for a Flawless Implementation

- Design for Graceful Failure: No AI is perfect. Program clear fallback paths in your conversational logic and design a seamless handoff to a human agent when the bot gets stuck. FreJun’s API can facilitate this live call transfer.

- Ensure Security and Privacy: Manage all API keys and user data securely. Encrypt all communication and ensure your data handling practices comply with all relevant privacy regulations.

- Continuously Monitor and Iterate: Use call analytics and conversation logs to understand how users are interacting with your agent. This data is invaluable for refining its instructions, improving its tool usage, and enhancing the overall user experience.
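The first practice, graceful failure, might look like this in code. It is a minimal sketch: the `FallbackPolicy` class, the two-miss threshold, and the action names are assumptions made for illustration, and the actual call transfer would be performed through whatever transfer mechanism your telephony provider exposes.

```python
from dataclasses import dataclass


@dataclass
class FallbackPolicy:
    """Track consecutive misunderstandings and decide when to hand off."""

    max_misses: int = 2  # assumed threshold; tune it from real call data
    misses: int = 0

    def next_action(self, understood: bool) -> str:
        if understood:
            self.misses = 0  # a successful turn resets the counter
            return "continue"
        self.misses += 1
        if self.misses >= self.max_misses:
            return "transfer_to_human"
        return "reprompt"


if __name__ == "__main__":
    policy = FallbackPolicy()
    for understood in (False, True, False, False):
        print(policy.next_action(understood))
```

Counting only consecutive misses means a single misheard sentence in a long, otherwise successful call does not trigger a transfer.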

Final Thoughts: Your AI is Brilliant. Make Sure It Can Answer the Call

The power of AI voice agents using Amazon Alexa LLM is undeniable. It represents a paradigm shift in our ability to create intelligent, helpful, and truly conversational AI. But that intelligence is only as valuable as its accessibility. A brilliant AI that is trapped within a single ecosystem cannot solve real-world business problems at scale.

The strategic path forward is to combine the best AI brain with the best voice infrastructure. By leveraging a specialized platform like FreJun, you can offload the immense burden of telecom engineering and focus your valuable resources on what truly differentiates your business: the intelligence of your AI and the quality of the customer experience you deliver.

Build an agent that’s as smart as Alexa, and let us give it a voice that can reach the world.

Further Reading: How to Deploy a Voice Bot AI Using Simple APIs

Frequently Asked Questions (FAQ)

No, FreJun does not replace the Alexa AI Action SDK; it integrates with it. You use the Alexa AI Action SDK to build your agent’s “brain”: its intelligence, conversational logic, and function calling capabilities. FreJun provides the separate, essential voice infrastructure that connects that brain to the telephone network.

Yes. FreJun is designed to stream the raw, unprocessed audio from the phone call to your backend. You can then forward this raw audio to the Alexa ASR for transcription and send your text response to the Alexa TTS for synthesis, allowing you to leverage the full power of the Amazon ecosystem.

Function calling is managed by your backend. When the Alexa LLM determines that it needs to call a function, it will send a request to your backend. Your backend code will then execute the function (e.g., make a database query), send the result back to the Alexa LLM, and then the LLM will use that result to formulate its final response.
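A backend-side dispatcher for this round trip could be sketched as below. The request shape (`{"name": ..., "arguments": {...}}`), the `tool` decorator, and `get_order_status` are hypothetical; the actual message format is defined by the SDK you use, so treat this as an illustration of the pattern only.

```python
import json
from typing import Any, Callable

# Registry of functions the LLM is allowed to call, keyed by name.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the dispatcher can find it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_order_status(order_id: str) -> dict:
    # Hypothetical example; a real version would query your database.
    return {"order_id": order_id, "status": "shipped"}


def handle_function_call(request_json: str) -> str:
    """Run the requested function and return a JSON result for the LLM."""
    request = json.loads(request_json)
    name = request.get("name")
    fn = TOOLS.get(name)
    if fn is None:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        result = fn(**request.get("arguments", {}))
    except TypeError as exc:  # missing or unexpected arguments
        return json.dumps({"error": f"bad arguments: {exc}"})
    return json.dumps({"result": result})


if __name__ == "__main__":
    print(handle_function_call(
        '{"name": "get_order_status", "arguments": {"order_id": "A1"}}'
    ))
```

Returning errors as data rather than raising lets the LLM explain the problem to the caller or retry with corrected arguments.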

Absolutely not: no telephony expertise is required. We abstract away all the complexity of telephony. If you can work with a standard backend API and a WebSocket, you have all the skills needed to build powerful AI voice agents using Amazon Alexa LLM.